Industry Coding Framework Explained: Standards, Benefits & Best Practices

Think “coding framework” and you might imagine a checklist of libraries, lint rules, and CI badges. In hiring and engineering practice, the part that actually matters is far less boring and much more practical: an Industry Coding Framework (ICF) is a validated, project-style assessment that simulates real feature work, measures engineering judgment, and produces auditable evidence you can act on. This guide also compares the ICF with CodeSignal’s General Coding Assessment (GCA) and covers level-by-level guidance, candidate prep tips, and policy and metric details, so both hiring teams and candidates walk away ready to use the framework.

What ICF is used for

An Industry Coding Framework is a tool for making hiring and development decisions more objective, job-relevant, and defensible. Teams use ICFs to simulate day-one work, compare candidates against the same rubric, surface skill gaps across cohorts, and feed evidence into hiring, onboarding, and L&D workflows. In short: an ICF turns everyday engineering work into structured, auditable evidence that hiring teams and managers can act on. Practical uses of an ICF include:

- Hiring & selection: Replace or complement algorithmic screens with a multi-level work simulation that shows how candidates design, evolve, and deliver a feature. Example: shorten interview funnels by using an ICF pass/fail result plus a targeted live interview.

- Benchmarking & calibration: Create consistent role-level expectations (junior → senior) and norm graders with anchor responses so scoring is comparable across teams. Example: map Level 2 to mid-level backend expectations for your stack.

- Onboarding & role fit: Use ICF outputs to tailor new-hire learning plans and identify early mentors or pairing partners. Example: route new hires who scored lower on testability to a focused testing curriculum.

- Learning & development: Aggregate rubric results to reveal org-level skill gaps and build targeted training modules. Example: run quarterly reports that reveal declining test-coverage skills, then launch a 6-week course.

- Compliance & risk control: Embed domain checkpoints (e.g., audit trails, PHI handling) to reduce regulatory surprises in regulated products.

- EEO / fairness & defensibility: Structured rubrics and cross-marking reduce subjectivity and support hiring decisions with auditable evidence.

What is an Industry Coding Framework (ICF) - plain English

An ICF bundles three things:

- A work-simulation: a domain-agnostic project that unfolds across progressive levels (usually four), mirroring how real features evolve.

- A shared vocabulary & rubric: agreed design patterns, quality attributes, and scoring rules so different graders reach consistent conclusions.

- Operational rules: timeboxes, language-agnostic constraints, proctoring or anti-cheat measures, and exportable reporting for hiring analytics.

A widely adopted example is CodeSignal’s ICF-style evaluation: one project, four progressive levels, a ~90-minute single-session cap, support for multiple languages, and a standard scoring mapping used by hiring teams.

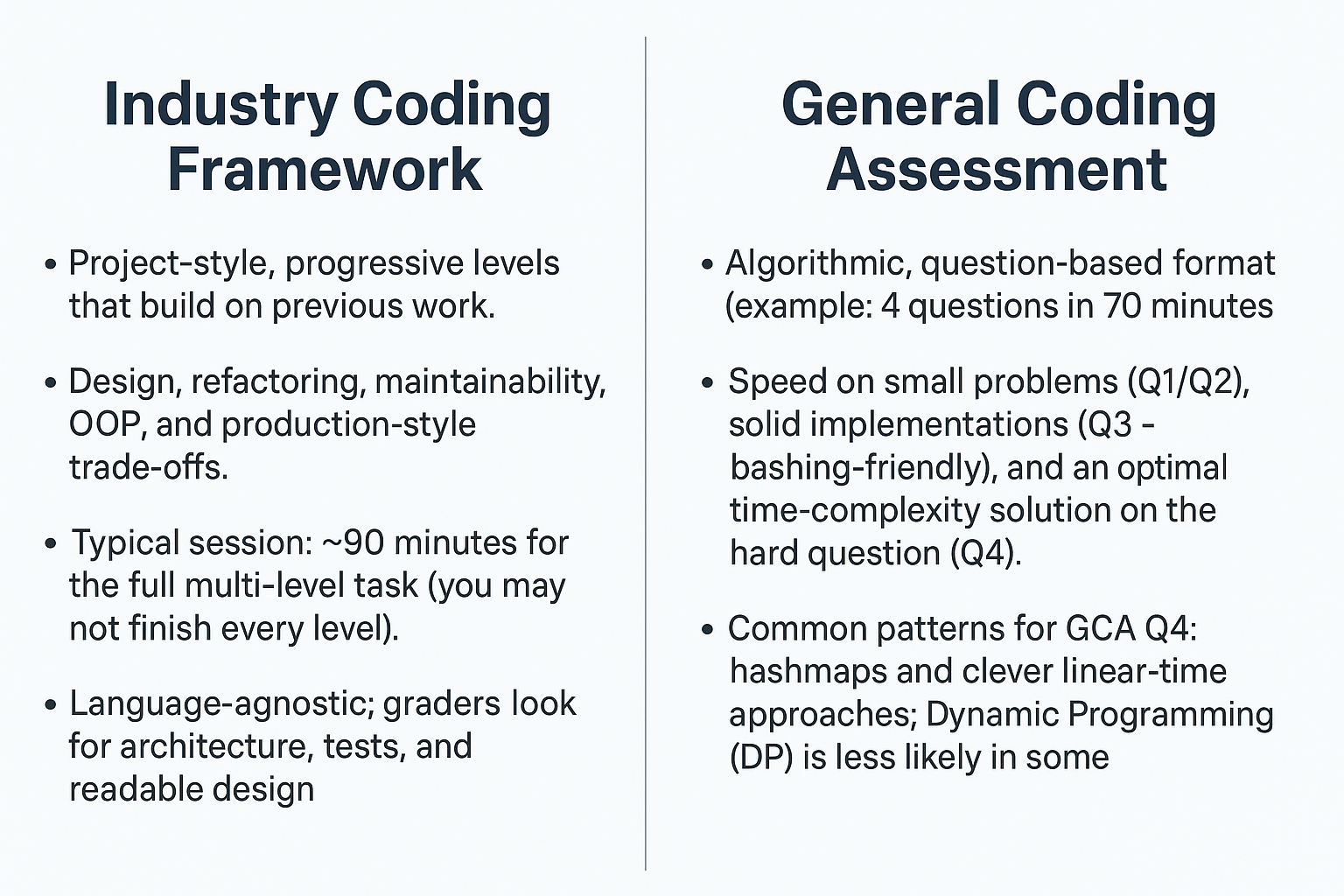

ICF vs. GCA - quick comparison

Industry Coding Framework (ICF)

- Project-style, progressive levels that build on previous work.

- Emphasizes design, refactoring, maintainability, OOP, and production-style trade-offs.

- Typical session: ~90 minutes for the full multi-level task (you may not finish every level).

- Language-agnostic; graders look for architecture, tests, and readable design.

General Coding Assessment (GCA)

- Algorithmic, question-based format (example: 4 questions in 70 minutes).

- Emphasizes speed on small problems (Q1/Q2), solid implementations on Q3 (where straightforward brute-force “bashing” is usually acceptable), and an optimal time-complexity solution on the hardest question (Q4).

- Common patterns for GCA Q4: hashmaps and clever linear-time approaches; Dynamic Programming (DP) is less likely in some GCA variants.
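
To make the Q3/Q4 contrast concrete, here is a minimal Python sketch of the hashmap pattern on a hypothetical problem: count index pairs whose values sum to a target. The problem and function names are illustrative assumptions, not actual GCA items. The nested-loop version is the kind of “bashing” that is fine on Q3; the single-pass hashmap version is the linear-time style that Q4 tends to reward.

```python
from collections import Counter


def count_pairs_bruteforce(nums: list[int], target: int) -> int:
    """O(n^2) 'bashing' approach: check every pair i < j."""
    count = 0
    for i in range(len(nums)):
        for j in range(i + 1, len(nums)):
            if nums[i] + nums[j] == target:
                count += 1
    return count


def count_pairs_hashmap(nums: list[int], target: int) -> int:
    """O(n) approach: one pass, counting earlier values that complete each pair."""
    seen: Counter[int] = Counter()
    count = 0
    for x in nums:
        count += seen[target - x]  # how many earlier values pair with x
        seen[x] += 1
    return count


if __name__ == "__main__":
    data = [1, 5, 7, -1, 5]
    print(count_pairs_bruteforce(data, 6), count_pairs_hashmap(data, 6))
```

Both functions return the same answer; the hashmap version trades O(n) extra memory for a single pass over the input.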

Both are valuable: ICF shows how you build and evolve real systems; GCA tests algorithmic reasoning and speed. Hiring teams often use them together.

Why trust an ICF-style framework?

High-quality frameworks earn trust through multiple authority signals:

- SME authorship & review: senior engineers and architects write and validate tasks so they reflect real trade-offs (Domain Model vs. Transaction Script, when to refactor, etc.).

- Alignment to canonical patterns: frameworks map evaluation criteria to time-tested literature (SOLID, Fowler, Gang of Four).

- Regulatory awareness: for healthcare/finance, rubrics include compliance checkpoints (audit trails, PHI handling).

- Published validation: the strongest vendors publish briefs describing item-writing, fairness testing, and score mapping.

Crucially, a good ICF does not just check boxes. It tests the engineering habits that predict long-term success: readable abstractions, testable modules, and pragmatic trade-offs.

The building blocks: what graders actually look for

Expect the framework to test both what you build and how you reason about it.

Canonical patterns & architectures

- MVC, Service Layer, Repository/Data Mapper, Unit of Work, Domain Model. Graders look for appropriate use, not dogma.
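
As an illustration of Repository plus Service Layer, here is a minimal Python sketch. The `Order` domain and all class names are hypothetical; this is one reasonable way to separate storage from use cases, not a required structure.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Protocol


@dataclass
class Order:
    order_id: str
    amount_cents: int
    status: str = "pending"


class OrderRepository(Protocol):
    """Repository: hides how orders are stored from the rest of the code."""

    def get(self, order_id: str) -> Order | None: ...
    def add(self, order: Order) -> None: ...


class InMemoryOrderRepository:
    """Dict-backed repository, handy for tests and early levels."""

    def __init__(self) -> None:
        self._orders: dict[str, Order] = {}

    def get(self, order_id: str) -> Order | None:
        return self._orders.get(order_id)

    def add(self, order: Order) -> None:
        self._orders[order.order_id] = order


class OrderService:
    """Service Layer: application use cases, independent of storage details."""

    def __init__(self, repo: OrderRepository) -> None:
        self._repo = repo

    def place_order(self, order_id: str, amount_cents: int) -> Order:
        if amount_cents <= 0:
            raise ValueError("amount must be positive")
        if self._repo.get(order_id) is not None:
            raise ValueError(f"order {order_id} already exists")
        order = Order(order_id, amount_cents)
        self._repo.add(order)
        return order

    def mark_paid(self, order_id: str) -> Order:
        order = self._repo.get(order_id)
        if order is None:
            raise KeyError(order_id)
        order.status = "paid"
        return order
```

Because `OrderService` depends only on the repository interface, a later level could swap the dict for a database-backed repository without touching the use-case code.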

Core principles

- SOLID, Dependency Injection, Separation of Concerns, DRY, KISS - evidence that candidates prefer maintainability over clever hacks.

For a practical, hands-on implementation of Dependency Injection in Node.js + TypeScript (InversifyJS examples, IoC container wiring, and testing patterns), see our guide: Dependency Injection in NodeJS TypeScript.
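
The linked guide uses InversifyJS in TypeScript, but the underlying idea does not need a container. Below is a minimal Python sketch of plain constructor injection, assuming a hypothetical `InvoiceChecker` that needs the current time. Injecting a `Clock` abstraction instead of calling `datetime.now()` directly is what makes the overdue rule deterministic under test.

```python
from __future__ import annotations

from datetime import datetime, timedelta, timezone
from typing import Protocol


class Clock(Protocol):
    def now(self) -> datetime: ...


class SystemClock:
    """Production dependency: reads the real wall clock in UTC."""

    def now(self) -> datetime:
        return datetime.now(timezone.utc)


class FixedClock:
    """Test double: always returns the same instant, so tests are deterministic."""

    def __init__(self, instant: datetime) -> None:
        self._instant = instant

    def now(self) -> datetime:
        return self._instant


class InvoiceChecker:
    """Depends on the Clock abstraction, which is injected via the constructor."""

    def __init__(self, clock: Clock, grace: timedelta = timedelta(days=30)) -> None:
        self._clock = clock
        self._grace = grace

    def is_overdue(self, issued_at: datetime) -> bool:
        return self._clock.now() - issued_at > self._grace
```

In production you would construct `InvoiceChecker(SystemClock())`; in tests, `InvoiceChecker(FixedClock(...))` pins time to a known instant.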

Non-functional attributes

- Scalability, availability, security, auditability. In regulated contexts, a candidate who includes an audit trail or secure storage mechanism will score higher on compliance rubrics.
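
For illustration, here is a minimal Python sketch of what an answer to an audit-trail checkpoint might look like, assuming an in-memory, append-only log. The class names are hypothetical, and the hash chain is one common tamper-evidence technique chosen here for illustration. Only record identifiers are logged, so no PHI ends up in the trail.

```python
from __future__ import annotations

import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first event


@dataclass(frozen=True)
class AuditEvent:
    actor: str      # who performed the action
    action: str     # e.g. "read", "update"
    record_id: str  # identifier only; no PHI payload is stored
    timestamp: str  # ISO 8601, UTC
    prev_hash: str  # digest of the previous event
    digest: str     # SHA-256 over this event's fields plus prev_hash


def _digest(actor: str, action: str, record_id: str, timestamp: str, prev_hash: str) -> str:
    payload = json.dumps([actor, action, record_id, timestamp, prev_hash])
    return hashlib.sha256(payload.encode()).hexdigest()


@dataclass
class AuditLog:
    """Append-only, hash-chained audit trail (in-memory sketch)."""

    events: list[AuditEvent] = field(default_factory=list)

    def record(self, actor: str, action: str, record_id: str) -> AuditEvent:
        prev_hash = self.events[-1].digest if self.events else GENESIS
        timestamp = datetime.now(timezone.utc).isoformat()
        digest = _digest(actor, action, record_id, timestamp, prev_hash)
        event = AuditEvent(actor, action, record_id, timestamp, prev_hash, digest)
        self.events.append(event)
        return event

    def verify(self) -> bool:
        """Recompute the chain; editing, reordering, or deleting a non-final event fails."""
        prev_hash = GENESIS
        for e in self.events:
            expected = _digest(e.actor, e.action, e.record_id, e.timestamp, prev_hash)
            if e.prev_hash != prev_hash or e.digest != expected:
                return False
            prev_hash = e.digest
        return True
```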

ICF Level progression (how a single project unfolds)


Most ICF tasks are split into four progressive levels that simulate iterative feature work:

- Level 1: Initial design & basic functions (10–15 minutes expected). Small, self-contained implementation: data modeling, basic API surface, and a few unit tests (~10–30 LOC typical for this level).

- Level 2: Data processing & core logic. Larger data transformations, edge-case handling, and more test cases. Expect to add data structures and pass additional tests.

- Level 3: Refactoring & encapsulation. The candidate refactors existing code, extracts modules, applies OOP/design patterns, and improves test coverage. This level separates candidates who can evolve code from those who only write fresh implementations.

- Level 4: Extending design & final features (largest scope). Add features, integrate cross-cutting concerns (e.g., error handling, configuration), and ensure backward compatibility. Cumulative LOC across levels might reach ~90–160 LOC depending on the task.

These levels test incremental engineering: you’re judged not only on final output but on how you adapt previous work - a close simulation of day-to-day software development.
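
To illustrate how one codebase grows across levels, here is a minimal Python sketch of a hypothetical in-memory key-value task (not an actual vendor task). Level 1 adds set/get/delete, Level 2 adds a sorted prefix scan, Level 3 extracts the repeated liveness check into one helper, and Level 4 adds optional expiry while keeping earlier calls backward compatible.

```python
from __future__ import annotations


class InMemoryStore:
    """Hypothetical ICF-style task, grown level by level."""

    def __init__(self) -> None:
        # key -> (value, expires_at or None); the tuple shape arrived at Level 4
        self._data: dict[str, tuple[str, int | None]] = {}

    # Level 1: basic API surface.
    # Level 4 added the optional now/ttl parameters; Level 1 callers still work unchanged.
    def set(self, key: str, value: str, now: int = 0, ttl: int | None = None) -> None:
        expires_at = now + ttl if ttl is not None else None
        self._data[key] = (value, expires_at)

    def get(self, key: str, now: int = 0) -> str | None:
        if not self._is_live(key, now):
            return None
        return self._data[key][0]

    def delete(self, key: str, now: int = 0) -> bool:
        if not self._is_live(key, now):
            return False
        del self._data[key]
        return True

    # Level 2: data processing over the whole dataset
    def scan_prefix(self, prefix: str, now: int = 0) -> list[str]:
        """Return 'key=value' strings for live keys with the prefix, sorted by key."""
        return [
            f"{k}={v}"
            for k, (v, _) in sorted(self._data.items())
            if k.startswith(prefix) and self._is_live(k, now)
        ]

    # Level 3: expiry logic extracted into one helper instead of being repeated
    def _is_live(self, key: str, now: int) -> bool:
        entry = self._data.get(key)
        if entry is None:
            return False
        expires_at = entry[1]
        return expires_at is None or now < expires_at
```

Note how Level 4 only touches `set` and `_is_live`; the Level 3 refactor is what keeps that change small.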

Practical metrics and policy (what organizations measure)

When evaluating frameworks and communicating to candidates, be explicit about these measurable data points:

- Time-boxing: ICF-style tasks often use a 90-minute cap for the full project; GCA-type tests commonly use 70 minutes for four questions. Setting expectations reduces candidate anxiety and improves fairness.

- Scoring: Many vendors map outcomes to a standard numeric scale (example: 200–600 for certain Skills Evaluation outputs). Scores may be derived from partial credit across levels and rubric checkpoints.

- Partial credit & grading: Frameworks commonly award partial credit for passing tests, good design choices, and incremental progress — not just perfect final solutions.

- Retake and validity: Typical retake policies and result validity windows differ by vendor. Example policies you may see: limited retakes (e.g., max two in 30 days, max three in 6 months) and result-age rules for hiring pipelines. Always publish your vendor’s retake rules and the date of the scoring schema you rely on.

- Code volume guidance: Provide reasonable LOC and feature expectations (e.g., ~10–30 LOC for a Level 1 implementation vs. ~90–160 cumulative LOC by Level 4) so candidates know what “done” looks like.

(Organizations: record the date of your vendor’s scoring schema so you can interpret historical results consistently.)
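
To show how partial credit across levels can roll up into a single number, here is a minimal Python sketch. The level weights and the linear mapping onto a 200–600 range are assumptions for illustration only; they are not any vendor's actual scoring formula.

```python
from __future__ import annotations

# Hypothetical level weights: later levels are larger, so they count for more.
LEVEL_WEIGHTS = {1: 0.15, 2: 0.25, 3: 0.25, 4: 0.35}
SCALE_MIN, SCALE_MAX = 200, 600  # example range only; real vendor mappings differ


def partial_credit(tests_passed: dict[int, tuple[int, int]]) -> float:
    """Weighted fraction of tests passed; levels not reached earn 0.

    tests_passed maps level -> (passed, total).
    """
    earned = 0.0
    for level, weight in LEVEL_WEIGHTS.items():
        passed, total = tests_passed.get(level, (0, 0))
        if total > 0:
            earned += weight * (passed / total)
    return earned


def to_scale(fraction: float) -> int:
    """Linearly map a 0..1 fraction onto the example numeric scale."""
    return round(SCALE_MIN + fraction * (SCALE_MAX - SCALE_MIN))
```

For example, a candidate who passes 5/5 tests at Level 1, 8/10 at Level 2, 3/6 at Level 3, and never reaches Level 4 earns 0.15 + 0.20 + 0.125 = 0.475, which this mapping turns into 390.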

Benefits for organizations - measurable upside

Adopting a validated ICF approach produces measurable returns:

- Faster, fairer hiring: Work-simulations reduce time-to-hire by focusing on demonstrated ability, not pedigree.

- Better role-fit: Role-specific modules align hiring to true day-one responsibilities (API-heavy vs. UI-heavy roles).

- Lower compliance risk: Embedding compliance prompts reduces downstream legal surprises in regulated products.

- Less bias: Structured rubrics and cross-marking improve EEOC defensibility.

- Actionable L&D signals: Aggregated results reveal org-level skill gaps and guide upskilling investments.

Quantifying exact ROI varies by company context, but teams report faster ramp, fewer rehires, and improved hiring confidence when ICF outputs are used beyond simple pass/fail.

Best practices: rollouts for teams and prep tips for candidates

For hiring teams: a practical rollout plan

- Align stakeholders (2 weeks) - gather senior engineers, hiring managers, and compliance SMEs; define role outcomes at 3/6 months.

- Adopt a validated framework (don’t invent your own) - a public framework brief saves months of psychometric work.

- Map to job families (1 week) - decide which framework parts map to backend, frontend, full-stack, platform.

- Configure environment & timeboxes (ongoing) - use language-agnostic tasks for systems thinking; add stack-specific modules for role fit.

- Train graders & calibrate (2–4 weeks) - norm graders with anchor responses and cross-marking.

- Use outputs beyond pass/fail - route aggregated gaps into training, pairing, and mentorship.

- Version & revisit annually - publish change notes as tech and compliance expectations shift.
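
One simple way to norm graders with anchor responses is to have every grader score the same anchors and flag anyone who drifts from the agreed reference scores. The Python sketch below assumes hypothetical anchor IDs, a 1–5 scale, and a 0.5-point tolerance; adjust all three to your rubric.

```python
from __future__ import annotations

from statistics import mean

# Hypothetical reference ("anchor") scores agreed by the calibration panel, 1-5 scale.
ANCHOR_SCORES = {"anchor_a": 4, "anchor_b": 2, "anchor_c": 3}
TOLERANCE = 0.5  # assumed maximum acceptable mean absolute deviation


def grader_deviation(grader_scores: dict[str, int]) -> float:
    """Mean absolute difference between a grader's scores and the anchor scores."""
    return mean(abs(grader_scores[a] - ref) for a, ref in ANCHOR_SCORES.items())


def needs_recalibration(all_graders: dict[str, dict[str, int]]) -> list[str]:
    """Return graders (sorted by name) whose deviation exceeds the tolerance."""
    return sorted(
        name for name, scores in all_graders.items()
        if grader_deviation(scores) > TOLERANCE
    )
```

Flagged graders re-review the anchors with the panel before grading live candidates.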

For candidates: pragmatic prep & execution (ICF and GCA tips)

- Practice the format: Try 90-minute small projects that include an API, persistence, tests, and a README.

- Plan before coding: Spend a few minutes sketching pseudocode, API shapes, and tests. That planning pays off at refactor checkpoints.

- Prioritize correctness & maintainability: ICF graders prefer testable, modular solutions over micro-optimized spaghetti code.

- Practice refactoring: Do exercises where you are given working-but-smelly code and must clean it up while preserving behavior.

- Environment & tooling: Know your language version and how the vendor’s runner executes code (example practice env: Python 3.10.6 and a test_simulation.py harness).

- Time management: For GCA-style tests, move quickly through Q1/Q2, implement Q3 reliably (brute-force “bashing” is acceptable), and for Q4 plan an algorithm that targets optimal time complexity. Hashmaps are common helpers; DP is often not required for typical Q4 items.

- Unit tests: Run tests frequently; a passing test suite beats an untested attempt at a perfect solution.

- Typing speed: Practice typing and small refactors to reduce edit latency during the timer.
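
Running tests frequently is easier with a small test file next to your solution. Here is a minimal sketch using Python's standard `unittest` module, assuming a hypothetical Level 1 function `apply_discount`; it is not the vendor's `test_simulation.py` harness.

```python
import unittest


def apply_discount(price_cents: int, percent: int) -> int:
    """Hypothetical Level 1 function: apply a whole-number percentage discount."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    # Integer arithmetic avoids float rounding; the result rounds down to whole cents.
    return price_cents * (100 - percent) // 100


class ApplyDiscountTest(unittest.TestCase):
    def test_typical_discount(self) -> None:
        self.assertEqual(apply_discount(1000, 15), 850)

    def test_zero_and_full_discount(self) -> None:
        self.assertEqual(apply_discount(999, 0), 999)
        self.assertEqual(apply_discount(999, 100), 0)

    def test_rounds_down_to_whole_cents(self) -> None:
        self.assertEqual(apply_discount(999, 50), 499)

    def test_rejects_invalid_percent(self) -> None:
        with self.assertRaises(ValueError):
            apply_discount(1000, 120)
```

Run it with `python -m unittest <your_file>.py` after each small change so regressions surface immediately.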

Scoring practical rules

A transparent scoring model reduces disputes, improves grader consistency, and makes assessment outputs actionable.

What a good scoring model measures

A complete ICF scoring model typically combines these components (examples and rationale):

- Correctness & feature completion (40%) - passing the core tests and implementing the required feature set. This is the largest single signal of immediate functional ability.

- Tests & quality (20%) - unit/integration tests, test coverage for edge cases, test structure that makes changes safer.

- Design & architecture (15%) - appropriate use of patterns, modularity, separation of concerns, and readability of abstractions.

- Refactoring & incremental work (10%) - ability to evolve existing code, preserve behavior, and improve structure (this separates engineers who can maintain codebases from those who only write fresh implementations).

- Documentation & communication (5%) - README, clear API docs, and short notes explaining trade-offs.

- Non-functional attributes & compliance checkpoints (5%) - security, auditability, performance considerations, or domain-specific requirements (PHI, logging) as required by the role.

- Time management & process signals (5%) - evidence of planning, incremental commits, and passing tests frequently.

(Weights are illustrative and should be adapted per role; as listed, they total 100%.)
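
Applying these weights is simple arithmetic: rate each component between 0 and 1, multiply by its weight, and sum. Here is a minimal Python sketch; the component keys are hypothetical names for the list items above.

```python
from __future__ import annotations

# Illustrative weights from the list above (percent of the total score).
WEIGHTS = {
    "correctness": 40,
    "tests_quality": 20,
    "design": 15,
    "refactoring": 10,
    "documentation": 5,
    "non_functional": 5,
    "process": 5,
}
assert sum(WEIGHTS.values()) == 100  # sanity check on the weight table


def composite_score(ratings: dict[str, float]) -> float:
    """Combine per-component ratings (each 0.0-1.0) into a 0-100 score."""
    missing = WEIGHTS.keys() - ratings.keys()
    if missing:
        raise ValueError(f"missing ratings: {sorted(missing)}")
    for name, value in ratings.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} rating must be between 0 and 1")
    return sum(WEIGHTS[name] * ratings[name] for name in WEIGHTS)
```

For example, full correctness, half marks on tests and refactoring, 0.8 on design, full documentation and process, and nothing on non-functional attributes gives 40 + 10 + 12 + 5 + 5 + 0 + 5 = 77.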

Common pitfalls & fixes

- Pitfall: Using a single score to decide hires. Fix: Combine work-simulation results with structured interviews and role-fit evidence.

- Pitfall: Rewarding micro-optimizations in short sessions. Fix: Prioritize maintainability, correctness, and testability; treat micro-optimizations as optional unless they are role-specific.

- Pitfall: Ignoring documentation and accessibility. Fix: Include a short README, test cases, and API docs in the rubric. These are low-effort signals with high payoff.

More real-world case studies

Case study 1: How one Healthtech company used an ICF to cut hiring time in half

Example: a 250-engineer health-tech firm aligned their ICF to Django/Python and HIPAA requirements and replaced a lengthy, four-stage interview funnel with a validated coding simulation plus a 60-minute technical interview. Outcomes after the pilot:

- Time-to-hire: from 8 to 4 weeks.

- First-year turnover for mid-level hires: down nearly 50%.

Why it worked: the multi-level simulation included a short compliance prompt - designing an audit trail - which reliably exposed candidates who didn’t understand healthcare audit or PHI-handling needs. Those candidates were screened out earlier, reducing later mismatches.

Practical tip for publishing results: when you share case metrics, annotate them with which ICF level(s) caused each selection or attrition decision (for example, “Level 2 compliance checkpoint removed X% of candidates” or “Failures at Level 3 predicted early attrition”). That mapping makes the evidence actionable for other teams.

Case study 2: Fintech scale-up improved hires & ramp with a role-specific ICF

A 120-engineer fintech replaced a 5-stage interview funnel with a role-specific ICF (Node/React + PCI/security checkpoints) plus a 45-minute technical interview. Pilot outcomes after three months:

- Interview-to-offer ratio: improved from 6:1 to 3:1 (fewer interviews per hire).

- Time-to-ramp: shortened from 12 to 8 weeks (about one-third faster).

- 6-month success rate: proportion of hires meeting manager expectations rose noticeably (management-reported improvement).

Why it worked: the ICF included role-specific modules (API reliability, payment-edge cases, and security checks) and awarded partial credit for incremental, test-backed progress. Automated test checkpoints caught basic correctness early; human graders focused on design, refactor quality, and security reasoning — reducing false positives that passed algorithmic screens but failed in production contexts.

Practical tip: when publishing results, annotate which ICF level predicted which outcome — e.g., “Pass/fail at Level 1 predicted baseline correctness; failures at Level 3 (refactor & security prompt) correlated with slower ramp and early performance issues.” That mapping helps other teams decide which checkpoints to prioritize for their roles.

Quick checklist for choosing a vendor/framework

Evaluate vendors on five criteria (validation, transparency, role fit, reporting, and compliance) so you pick a defensible, actionable ICF.

FAQs

How long do assessments usually take?

ICF-style project tasks commonly use a 60–90 minute single sitting; GCA commonly uses 70 minutes for four algorithmic problems.

How are ICF Levels structured?

Four levels — initial design (Level 1), data-processing (Level 2), refactoring & encapsulation (Level 3), and extending design & final features (Level 4). Each builds on the last.

How should I split time on a GCA?

Move fast on Q1/Q2, implement reliably on Q3, and allocate focused planning + optimal-complexity effort to Q4. Use hashmaps and linear-time tactics when applicable.

Are ICF assessments biased against self-taught devs?

Not inherently. Good frameworks are language-agnostic and emphasize system design and reasoning. Transparency and practice tasks reduce bias.

What about retakes and score validity?

Vendor policies vary; common patterns include limited retakes (e.g., max two in 30 days, max three in 6 months) and result-age rules. Always publish the vendor retake policy and scoring schema date.
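
If you publish a retake policy, it helps to encode it unambiguously. Here is a minimal Python sketch of the example limits above. It assumes that every attempt (including the first) counts toward the limit, that windows roll backward from today, and that “6 months” means roughly 182 days; check each of these interpretations against your vendor's actual rules.

```python
from __future__ import annotations

from datetime import date, timedelta

# Example policy from the text; real vendor rules and window definitions vary.
RULES = [
    (timedelta(days=30), 2),   # at most 2 attempts in any rolling 30-day window
    (timedelta(days=182), 3),  # at most 3 attempts in ~6 months (assumed 182 days)
]


def can_attempt(previous: list[date], today: date) -> bool:
    """True if one more attempt today stays within every rolling-window limit."""
    for window, limit in RULES:
        recent = [d for d in previous if today - d < window]
        if len(recent) + 1 > limit:
            return False
    return True
```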

Will AI replace assessments or interviews?

AI changes workflows (auto-scoring, proctoring, candidate assistance) but does not replace valid, job-aligned tasks. Use AI to augment grading and design, not replace human calibration.

Final words: where to start this week

- If you’re hiring: pick a validated framework brief as your baseline, run a 2-role pilot, and compare time-to-hire for the first three pilot hires.

- If you lead engineering: embed a compliance checkpoint for roles that touch user data and run a grader calibration session with at least two anchor responses.

- If you’re prepping as a candidate: practice 90-minute project tasks with an API, persistence, tests, and a README. Focus on passing tests and explaining trade-offs.
