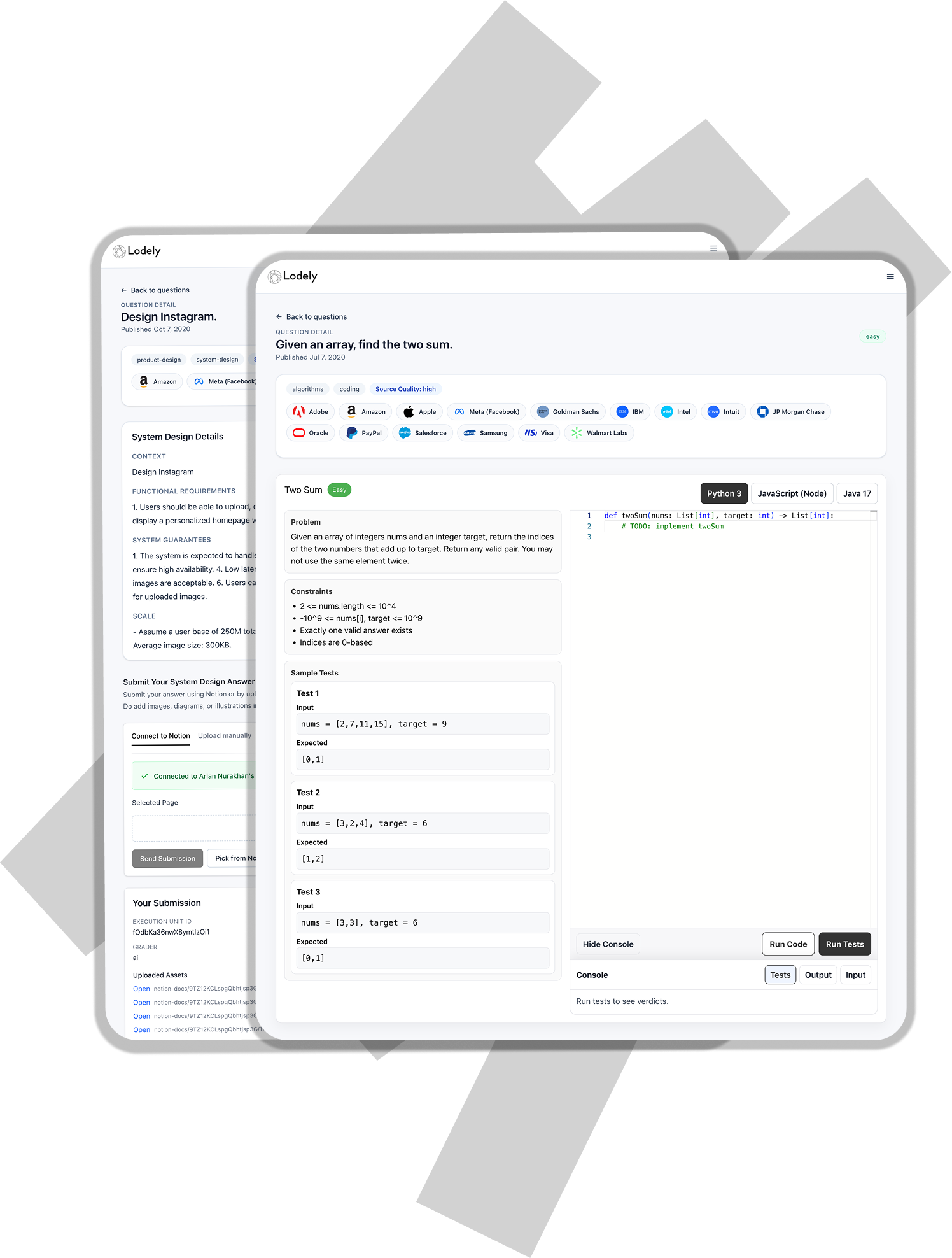

Atlassian Online Assessment: Questions Breakdown and Prep Guide

Thinking about taking on Atlassian’s online assessment but not sure what to expect? You’re not alone. Candidates report timed coding tasks, debugging exercises, and sometimes a light system design question, and the hardest part can be figuring out which patterns to train.

If you want to walk into the Atlassian Online Assessment (OA) confident and prepared, this guide is your playbook. No fluff. Real breakdowns. Strategic prep.

When to Expect the OA for Your Role

Atlassian’s OA timing and content vary by role and level, but the patterns are consistent.

- New Grad & Intern Positions – Most candidates receive an OA within 1–2 weeks of recruiter contact. It’s often the primary technical screen before onsites.

- Software Engineer (Backend / Full-Stack / Frontend / Mobile) – Standard practice. Expect a link to a platform like HackerRank or CodeSignal with 2–3 coding problems and occasional debugging tasks.

- Data / Analytics / SRE / Security – You’ll likely still see an OA, with domain twists: log parsing, SQL/ETL, rate limiting, or network/event processing.

- Senior & Staff Roles – Some pipelines skip a generic OA and move to live coding or a work-sample/code-pair, but many candidates still report an OA used as a first filter.

- Non-Engineering (PM, Design) – Rare. When it happens, it’s usually analytical reasoning or lightweight product/case prompts rather than coding.

Action step: When you get the recruiter email, ask: “What’s the expected format of the OA for this role?” You’ll usually get the platform, number of questions, and time limit — enough to simulate it exactly.

Does Your Online Assessment Matter?

Short answer: yes — more than you might expect.

- It’s a gatekeeper. A strong resume gets you invited, but your OA score determines whether you move forward.

- It sets interview direction. Your OA code may be referenced in later rounds to shape follow-up questions.

- It mirrors Atlassian work. Expect scenarios that feel like Jira/Confluence/Bitbucket contexts: dependencies, permissions, pagination, search, and rate limiting.

- It signals craftsmanship. Clear naming, edge-case handling, tests (when allowed), and time/space trade-offs all matter.

Pro Tip: Treat the OA like a first-round interview. Write clean code, handle edge cases, and communicate intent in comments when appropriate.

Compiled List of Atlassian OA Question Types

These problem types map closely to Atlassian-style scenarios. Practice them:

- Top K Frequent Elements — type: Hash Map / Heap

- Course Schedule (Dependency Resolution) — type: Graph / Topological Sort

- Design LRU Cache — type: Design / Hash + Linked List

- Merge Intervals — type: Intervals / Sorting

- Meeting Rooms II — type: Intervals / Heap

- Logger Rate Limiter — type: Design / Hashing

- Design Hit Counter — type: Queue / Sliding Window

- Search Suggestions System — type: Trie / Sorting

- Flatten Nested List Iterator — type: Iterator / Stack

- Serialize and Deserialize Binary Tree — type: Tree / BFS-DFS

- Network Delay Time — type: Graph / Dijkstra

- Basic Calculator II — type: String Parsing / Stack

- K Closest Points to Origin — type: Heap / Quickselect

- Implement Trie (Prefix Tree) — type: Trie / Design
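As a warm-up, here is a minimal sketch of the first entry in the list, Top K Frequent Elements, using a hash map for counts and a heap for selection. The function name `top_k_frequent` and the tie-breaking rule (smaller item first) are assumptions made for illustration; an actual OA prompt may specify a different tie-break.

```python
import heapq
from collections import Counter


def top_k_frequent(items, k):
    """Return the k most frequent items, most frequent first.

    Assumption: ties are broken by the item itself (smaller first)
    so that the output is deterministic.
    """
    counts = Counter(items)
    # nsmallest on (-count, item) yields the highest counts first,
    # then the smallest item among equal counts.
    best = heapq.nsmallest(k, counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return [item for item, _ in best]
```

For example, `top_k_frequent(["bug", "task", "bug", "epic", "task", "bug"], 2)` returns `["bug", "task"]`.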

How to Prepare and Pass the Atlassian Online Assessment

Think of your prep as a mini training camp. You’re not memorizing — you’re building reflexes.

1. Assess Your Starting Point (Week 1)

- Take a timed mock on HackerRank/CodeSignal to baseline speed and accuracy.

- Identify weak categories: graphs (topo sort), intervals, tries, heaps, parsing.

- Pick a primary language and commit — know its I/O, collections, priority queues, and regex cold.

2. Pick a Structured Learning Path (Weeks 2-6)

- Self-Study on LeetCode/HackerRank – Best for disciplined learners. Build a 50–70 question plan covering arrays, hashing, sliding window, graphs, tries, and intervals.

- Timed Mock Assessments – Simulate Atlassian-style OAs: 90 minutes, 2–3 problems, one medium-hard.

- Mentor or Career Coach – A reviewer can spot clarity/complexity issues and refine your pacing and problem selection.

3. Practice With Realistic Problems (Weeks 3-8)

- Create “kits” around Atlassian-like themes:

- Dependencies & workflows: topological sort, DAG checks, cycle detection.

- Permissions & hierarchy: trees, DFS/BFS, lowest common ancestor.

- Search & suggestions: trie prefix operations, sorting + binary search for pagination.

- Rate limiting & logging: sliding window, counters, queues.

- After solving, refactor for readability and re-solve 48 hours later to cement patterns.

4. Learn Atlassian-Specific Patterns

Because Atlassian builds collaboration tools (Jira, Confluence, Bitbucket, Trello), expect:

- Issue dependencies, sprint planning, and scheduling conflicts (topo sort, intervals).

- Permission inheritance and group membership (tree/graph traversal).

- Search with filters and suggestions (tries, prefix match, ranking).

- Pagination and stable ordering for long lists (binary search on pages, offset handling).

- Rate limiting, deduplication, and idempotency for APIs (sliding window/token bucket).

- For frontend/mobile: component state, diff/merge logic, offline sync conflict resolution.
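To make the search-and-suggestions pattern above concrete, here is a rough sketch of a trie that returns a few stored words for a prefix. The class names, the `suggest` method, and the default limit of 3 are all hypothetical choices for illustration, and ranking is simply lexicographic; a real prompt might rank by popularity instead.

```python
class TrieNode:
    def __init__(self):
        self.children = {}    # character -> TrieNode
        self.is_word = False  # True if a stored word ends here


class Trie:
    def __init__(self):
        self.root = TrieNode()

    def insert(self, word):
        node = self.root
        for ch in word:
            node = node.children.setdefault(ch, TrieNode())
        node.is_word = True

    def suggest(self, prefix, limit=3):
        """Return up to `limit` stored words that start with `prefix`,
        in lexicographic order."""
        node = self.root
        for ch in prefix:
            node = node.children.get(ch)
            if node is None:
                return []
        results = []
        # Iterative preorder DFS. Children are pushed in reverse sorted
        # order so the smallest character is popped first.
        stack = [(node, prefix)]
        while stack and len(results) < limit:
            current, word = stack.pop()
            if current.is_word:
                results.append(word)
            for ch in sorted(current.children, reverse=True):
                stack.append((current.children[ch], word + ch))
        return results
```

After inserting `"jira"`, `"jira-align"`, and `"jql"`, calling `suggest("ji")` returns `["jira", "jira-align"]`.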

5. Simulate the OA Environment

- Use the expected platform (CodeSignal/HackerRank) and mirror constraints: time limit, language, no external libraries unless standard.

- Practice coding without copy-paste: rehearse common boilerplate (input parsing, graph and heap setup) beforehand until you can type it from memory with little time lost.

- Turn off distractions and run full-timed sessions to calibrate pacing.

6. Get Feedback and Iterate

- Post solutions for review or compare against top solutions. Track repeated mistakes (off-by-one errors, missed edge cases, redundant work inside loops).

- Keep a “gotchas” log and review it the night before the OA.

- Measure: target passing all visible tests in 25–35 minutes per medium problem.

Atlassian Interview Question Breakdown

Here are featured sample problems inspired by Atlassian-style work. Master these patterns and you’ll cover most OA ground.

1. Resolve Issue Dependencies (Topological Sort)

- Type: Graph / DAG / Kahn’s Algorithm

- Prompt: Given issues and dependency pairs (A depends on B), return a valid ordering to complete all issues or detect a cycle.

- Trick: Use in-degree tracking with a queue; prefer stable ordering (lexicographic) when asked to keep results predictable.

- What It Tests: Graph fundamentals, cycle detection, queue usage, and handling multiple starting nodes.
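The approach described above can be sketched with Kahn's algorithm, using a min-heap in place of a plain queue so that ties resolve lexicographically. The function name `resolve_order` and the convention of returning `None` on a cycle are assumptions for illustration; the sketch also assumes every issue named in a dependency pair appears in `issues` and that issue IDs are unique.

```python
import heapq
from collections import defaultdict


def resolve_order(issues, dependencies):
    """Return an order in which every issue comes after its dependencies.

    dependencies: iterable of (a, b) pairs meaning "a depends on b".
    Returns None if the dependencies contain a cycle.
    """
    dependents = defaultdict(list)  # b -> issues that wait on b
    in_degree = {issue: 0 for issue in issues}
    for a, b in dependencies:
        dependents[b].append(a)
        in_degree[a] += 1

    # All issues with no unmet dependencies are valid starting points.
    ready = [issue for issue, degree in in_degree.items() if degree == 0]
    heapq.heapify(ready)

    order = []
    while ready:
        issue = heapq.heappop(ready)  # smallest ID first for stable output
        order.append(issue)
        for waiting in dependents[issue]:
            in_degree[waiting] -= 1
            if in_degree[waiting] == 0:
                heapq.heappush(ready, waiting)

    # If some issues never reached in-degree 0, they sit on a cycle.
    return order if len(order) == len(issues) else None
```

With `issues = ["C", "A", "B", "D"]` and `dependencies = [("A", "B"), ("C", "A")]`, this returns `["B", "A", "C", "D"]`. The heap costs an extra log factor over a plain queue, which is usually an acceptable price for predictable output.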

2. Design an API Rate Limiter with Burst Control

- Type: Design / Sliding Window or Token Bucket

- Prompt: Implement a limiter allowing N requests per user per window, with short bursts allowed up to B tokens.

- Trick: Use token bucket or deque of timestamps per user; prune efficiently to keep O(1) amortized operations.

- What It Tests: Practical systems thinking, time-window math, memory/perf trade-offs, and clean API boundaries.
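One possible reading of this prompt is a per-user token bucket: tokens refill at N requests per window and the bucket holds at most B tokens. The sketch below takes that reading. The class name, the `allow` method, and passing `now` explicitly (in seconds, non-decreasing per user) are assumptions chosen to keep the example deterministic and testable.

```python
class TokenBucketLimiter:
    def __init__(self, rate_per_sec, burst):
        self.rate = rate_per_sec  # tokens added per second (N / window length)
        self.burst = burst        # bucket capacity B
        self.buckets = {}         # user -> (tokens, last_seen_timestamp)

    def allow(self, user, now):
        """Return True and consume one token if the request is allowed."""
        # New users start with a full bucket.
        tokens, last = self.buckets.get(user, (self.burst, now))
        # Refill for the elapsed time, capped at the burst size.
        tokens = min(self.burst, tokens + (now - last) * self.rate)
        if tokens >= 1:
            self.buckets[user] = (tokens - 1, now)
            return True
        self.buckets[user] = (tokens, now)
        return False
```

Each call does constant work and stores only two numbers per user, which is the main advantage over keeping a deque of timestamps. The trade-off is that a token bucket enforces an average rate rather than an exact count per window.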

3. Permission Inheritance in Project Hierarchy

- Type: Tree / DFS-BFS / Sets

- Prompt: Given a project tree where permissions can be granted at any node and inherited by descendants, compute the effective permissions for a target node/user.

- Trick: Accumulate grants/denies top-down; handle overrides cleanly and avoid recomputing by memoizing along the path.

- What It Tests: Tree traversal, state propagation, handling conflicting rules, and data modeling clarity.
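A minimal sketch of the top-down accumulation follows. The data model is hypothetical: `parent` maps each node to its parent (the root maps to `None`), and `rules` holds one user's grants and denies per node. It assumes that rules closer to the target override inherited ones and that a deny beats a grant on the same node; a real prompt would state its own precedence. Memoization is left out for brevity.

```python
def effective_permissions(parent, rules, target):
    """Compute the permissions in effect at `target` for one user.

    parent: dict mapping node -> parent node (root maps to None).
    rules:  dict mapping node -> {"grant": set, "deny": set}; both keys optional.
    """
    # Collect the path from the target up to the root.
    path = []
    node = target
    while node is not None:
        path.append(node)
        node = parent[node]

    effective = set()
    # Apply rules root-first so deeper nodes override inherited state.
    for node in reversed(path):
        rule = rules.get(node, {})
        effective |= rule.get("grant", set())
        effective -= rule.get("deny", set())
    return effective
```

For a chain `org -> project -> board` where `org` grants `read`, `project` grants `write` and denies `read`, and `board` grants `read` again, the effective set at `board` is `{"read", "write"}` and at `project` it is `{"write"}`.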

4. Sprint Capacity Planning (Choose Issues Under Capacity)

- Type: Dynamic Programming / Knapsack

- Prompt: Select a subset of issues with story points and values to maximize value under team capacity.

- Trick: Use a 1D DP array indexed by capacity to keep memory low; if constraints are large, discuss greedy vs. DP trade-offs and approximation.

- What It Tests: Optimization under constraints, DP patterns, and communicating trade-offs like complexity vs. accuracy.
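The 1D DP mentioned in the trick can be sketched as a standard 0/1 knapsack. The function name `max_sprint_value` and the `(story_points, value)` tuple layout are assumptions for illustration, and story points are assumed to be non-negative integers.

```python
def max_sprint_value(issues, capacity):
    """Return the maximum total value of issues that fit in `capacity`.

    issues: list of (story_points, value) pairs.
    capacity: team capacity in story points (non-negative integer).
    """
    # best[c] = best value achievable with at most c story points.
    best = [0] * (capacity + 1)
    for points, value in issues:
        # Iterate capacity downward so each issue is counted at most once.
        for c in range(capacity, points - 1, -1):
            best[c] = max(best[c], best[c - points] + value)
    return best[capacity]
```

With issues `[(3, 40), (5, 60), (2, 30)]` and capacity 5, the result is 70 (the 3-point and 2-point issues together). Running time is O(number of issues × capacity), which is why large capacities push the discussion toward greedy or approximate approaches.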

5. Flatten Comment Threads with Stable Pagination

- Type: Tree -> List / BFS / Ordering

- Prompt: Given nested comments (replies), output a flattened list in breadth-first order, with ties ordered stably by timestamp, and support fetching page k of size m.

- Trick: BFS with tie-breaking (timestamp or ID); compute start index safely and guard against partial last pages.

- What It Tests: Traversal, ordering guarantees, pagination math, and edge-case rigor.
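Here is a rough sketch of the traversal and the pagination math. The comment shape (dicts with `"id"`, `"ts"`, and optional `"replies"`) is hypothetical, siblings are ordered by timestamp and then ID, and pages are assumed to be 1-indexed; adjust the start index if the prompt counts pages from 0.

```python
from collections import deque


def _sort_key(comment):
    # Timestamp first, then ID, so equal timestamps still order stably.
    return (comment["ts"], comment["id"])


def flatten_comments(roots):
    """Return comment IDs in breadth-first order."""
    queue = deque(sorted(roots, key=_sort_key))
    flat = []
    while queue:
        comment = queue.popleft()
        flat.append(comment["id"])
        queue.extend(sorted(comment.get("replies", []), key=_sort_key))
    return flat


def get_page(items, k, m):
    """Return page k (1-indexed) of size m, or [] if out of range."""
    if k < 1 or m < 1:
        return []
    start = (k - 1) * m
    # Slicing past the end is safe, so a partial last page needs no special case.
    return items[start:start + m]
```

Flattening once and slicing per page is simple but holds the whole list in memory; for very long threads, a cursor-based scheme would be worth raising as a follow-up.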

What Comes After the Online Assessment

Passing the Atlassian OA is the opener. Next, you’ll shift from “can you code” to “can you collaborate, design, and ship.”

1. Recruiter Debrief & Scheduling

You’ll hear back within a few days if you pass. Expect high-level feedback and an outline of next rounds. Ask about format (code-pair vs. whiteboard), interviewers’ focus areas, and any role-specific prep.

2. Live Technical Interviews (Code Pair)

You’ll collaborate with an engineer in a shared editor. Expect:

- Algorithm/Data Structure problems similar to the OA, but conversational.

- Debugging/refactoring a code snippet with failing tests.

- Clear communication: narrate approach, verify assumptions, write tests if allowed.

Pro tip: Review your OA submissions. Interviewers sometimes say, “Walk me through your solution and what you’d change.”

3. System Design / Architecture Round

For mid-level and above, plan for a 45–60 minute design session. Prompts often resemble:

- Design a simplified issue search with filters, ranking, and pagination.

- Build a permission system for projects, groups, and users.

- Architect rate-limited, idempotent REST endpoints for bulk updates.

They’re looking for decomposition, trade-offs (consistency, caching, indexing), failure handling, and pragmatic scope control.

4. Behavioral & Values Interviews

Atlassian values matter. Expect prompts like:

- “Tell me about a time you simplified a process for the team.”

- “Describe a decision you made that put the customer first.”

- “When did you challenge the status quo and what happened?”

Map your stories to values such as open communication, customer empathy, teamwork, and being a change agent. Use STAR: Situation, Task, Action, Result.

5. Cross-Functional or Role-Specific Deep Dives

Depending on the team:

- Frontend: component state, accessibility, performance, and API integration.

- Backend: data modeling, API contracts, consistency, and observability.

- SRE: incident response, SLIs/SLOs, on-call pragmatism, and runbooks.

- Data: SQL, modeling, ETL trade-offs, and data quality checks.

Bring product sense: tie technical choices to user outcomes.

6. Offer & Negotiation

If you pass the loop, expect a recruiter call followed by a written offer with base, equity, and often a bonus. Research market ranges for your location and level, and negotiate respectfully with data.

Bottom line: The OA gets you in the door. The loop evaluates how you think, build, and collaborate. Prepare for algorithms, yes — but also for design, communication, and values alignment.

Conclusion

You don’t need to guess. You need to train with intent. If you:

- Benchmark early and target weak areas,

- Practice Atlassian-style problems under timed conditions,

- Write clear, robust code and explain trade-offs,

you’ll turn the OA from a hurdle into an advantage. Collaboration tools demand pragmatic engineering — show that in your OA and every round that follows.

For more practical insights and prep strategies, explore the Lodely Blog or start from Lodely’s homepage to find guides and career resources tailored to software engineers.
