Databricks Online Assessment: Questions Breakdown and Prep Guide

Wondering how to prep for Databricks’ OA without overtraining on the wrong stuff? You’re in the right place. Candidates report a mix of DSA questions and big-data-flavored twists: think streaming, joins, partitioning, and correctness at scale. If you want a focused plan and realistic expectations, this guide is your playbook. No fluff. Real breakdowns. Strategic prep.

When to Expect the OA for Your Role

Databricks tunes the OA by role and level. Expect differences in platform, number of questions, and whether SQL/data concepts show up.

- New Grad & Intern

- Common. You’ll often receive a HackerRank or CodeSignal link shortly after recruiter contact.

- Typically 2–3 coding problems. Languages such as Python, Java, C++, and Scala are usually allowed.

- Software Engineer (Backend / Platform / Full-Stack)

- Standard OA with algorithmic questions. Some problems reflect distributed systems constraints (streaming, k-way merge, memory limits).

- Data / ML / Data Infrastructure

- Expect DSA plus data-centric twists: aggregations, windowing, joins, and sometimes SQL. Knowledge of Spark concepts can help even if you won’t run Spark in the OA.

- Site Reliability / Security / Systems

- Similar OA plus occasional parsing, scheduling, or concurrency-flavored problems.

- Senior Roles

- Mixed reports. Some skip the OA and go straight to live rounds; others still gate with an OA. Ask your recruiter.

- Non-Engineering Roles

- Rare. If present, typically logic, analytics, or SQL (for analytics-heavy roles).

Action step: Ask your recruiter for the platform, number of questions, time limit, and whether SQL or data-specific content is included for your role.

Does Your Online Assessment Matter?

Yes — more than most candidates assume.

- It gates the loop. Passing the OA is the primary hurdle to reach live interviews.

- It’s a code sample. Interviewers may review your OA code to shape later questions.

- It tests “scale thinking.” Databricks cares about correctness, complexity, and how you reason about memory/disk trade-offs and streaming-like constraints.

- It reveals engineering habits. Clean code, naming, tests/examples, and edge-case handling all count.

Pro tip: Treat the OA as your first interview. Write production-quality code and include brief comments that explain invariants and complexity choices.

Compiled List of Databricks OA Question Types

Candidates report seeing a mix of classic DSA and data-at-scale patterns. Practice these:

- Merge K Sorted Lists — type: Heap / K-way Merge

- Top K Frequent Elements — type: Heap / Hash Map

- Sliding Window Maximum — type: Deque / Sliding Window

- Minimum Window Substring — type: Sliding Window / Hash Map

- Subarray Sum Equals K — type: Prefix Sum / Hash Map

- Kth Largest Element in an Array — type: Quickselect / Heap

- Find Median from Data Stream — type: Two Heaps / Streaming

- Course Schedule — type: Graph / Topological Sort

- Redundant Connection — type: Union-Find / Graph

- Merge Intervals — type: Sorting / Greedy

- Group Anagrams — type: Hashing / Strings

- LRU Cache — type: Design / LinkedHashMap

- Evaluate Division — type: Graph / Weighted Edges

- Department Top Three Salaries — type: SQL / Windowing

- Trips and Users — type: SQL / Joins & Aggregations

- Optional read: Skew join strategies — concept: Data Skew / Join Optimization

Note: You won’t run Spark in the OA, but patterns like k-way merge, streaming medians, and heavy-hitter detection mirror what a lakehouse engine does at scale: merging sorted runs, maintaining running statistics, and finding hot keys.
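Streaming medians are the least familiar of these patterns for many candidates, so here is a minimal sketch of the standard two-heap approach, assuming integer inputs. The class name `RunningMedian` is illustrative, not from any library. Each insert costs O(log n) and each median lookup is O(1).

```python
import heapq


class RunningMedian:
    """Two-heap running median.

    Invariants: every value in `low` <= every value in `high`, and
    len(low) == len(high) or len(low) == len(high) + 1.
    """

    def __init__(self) -> None:
        self.low: list[int] = []   # max-heap of the lower half (values stored negated)
        self.high: list[int] = []  # min-heap of the upper half

    def add(self, x: int) -> None:
        # Route the new value through `low` so its largest element moves up to `high`.
        heapq.heappush(self.low, -x)
        heapq.heappush(self.high, -heapq.heappop(self.low))
        # Rebalance so `low` holds the extra element when the count is odd.
        if len(self.high) > len(self.low):
            heapq.heappush(self.low, -heapq.heappop(self.high))

    def median(self) -> float:
        if not self.low:
            raise ValueError("median of an empty stream")
        if len(self.low) > len(self.high):
            return float(-self.low[0])
        return (-self.low[0] + self.high[0]) / 2


rm = RunningMedian()
medians = []
for value in [5, 15, 1, 3]:
    rm.add(value)
    medians.append(rm.median())
print(medians)  # [5.0, 10.0, 5.0, 4.0]
```

Only the two heap tops are ever inspected, which is why this pattern stays cheap even on a long stream.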

How to Prepare and Pass the Databricks Online Assessment

1. Assess Your Starting Point (Week 1)

Identify strengths (arrays, hashing, heaps) and gaps (graphs, sliding windows, SQL). Take a timed set of 2–3 medium problems to benchmark. For data roles, add a small SQL drill (joins, window functions).

2. Pick a Structured Learning Path (Weeks 2–6)

- Self-study (LeetCode, HackerRank, CodeSignal practice)

- Best if you’re disciplined; focus on mediums with occasional hards in heaps, graphs, and sliding windows.

- Mock assessments / proctored sims

- Useful to calibrate pacing and reduce OA anxiety.

- Mentor or coach

- A software engineer career coach can critique your solutions, complexity choices, and communication.

3. Practice With Realistic Problems (Weeks 3–8)

Build a 40–60 problem set prioritizing: heaps (top-K, streaming), hash maps + prefix sums, sliding windows, graph traversal, and interval merging. For data roles, add 10–15 SQL problems focused on joins, partitions, and window aggregates. Always time yourself and refactor for clarity.

4. Learn Databricks-Specific Patterns

Expect ideas that map to lakehouse and streaming work:

- K-way merge and external sort patterns

- Top-K and heavy-hitters (heap or count-sketch style thinking)

- Deduplication and idempotency within a time window

- Sessionization and window aggregations

- Join strategy trade-offs (shuffle vs broadcast) and skew handling

- Memory vs compute trade-offs; streaming vs batch semantics

- File format awareness (Parquet/Delta) at a conceptual level
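To make the join bullet concrete, here is a local, single-process sketch of two ideas: a broadcast hash join (build a hash table on the small side, stream the large side) and key salting, which is one common way to spread a skewed key over several partitions in a shuffle join. The function names and the sample data are hypothetical; a real engine distributes this work across executors, which the sketch only describes in comments.

```python
import random
from collections import defaultdict
from typing import Iterable, Iterator


def broadcast_hash_join(large: Iterable[tuple], small: Iterable[tuple]) -> Iterator[tuple]:
    """Inner-join (key, value) rows by building a hash table on the small side.

    In a cluster the small side would be copied ("broadcast") to every worker,
    so the large side is streamed in place instead of being shuffled by key.
    """
    table: dict = defaultdict(list)
    for key, value in small:
        table[key].append(value)
    for key, left in large:
        for right in table.get(key, ()):
            yield key, left, right


def salt_keys(large, small, num_salts: int, hot_keys: set):
    """Key salting for a skewed shuffle join, shown locally.

    Large-side rows for a hot key get a random salt in [0, num_salts), spreading them
    over several partitions; small-side rows for that key are replicated once per salt
    so every salted large row still finds exactly one copy of its match.
    """
    salted_large = [
        ((k, random.randrange(num_salts) if k in hot_keys else 0), v) for k, v in large
    ]
    salted_small = [
        ((k, s), v)
        for k, v in small
        for s in (range(num_salts) if k in hot_keys else (0,))
    ]
    return salted_large, salted_small


orders = [("us", 1), ("us", 2), ("us", 3), ("fr", 4)]
countries = [("us", "United States"), ("fr", "France")]

plain = sorted(broadcast_hash_join(orders, countries))
salted_orders, salted_countries = salt_keys(orders, countries, num_salts=4, hot_keys={"us"})
# Join on the composite (key, salt), then drop the salt: the result matches the plain join.
unsalted = sorted(
    (key, left, right)
    for (key, _salt), left, right in broadcast_hash_join(salted_orders, salted_countries)
)
print(plain == unsalted)  # True
```

The trade-off to articulate: broadcasting avoids a shuffle but only works when one side fits in worker memory, while salting keeps a shuffle join but pays for it by replicating the small side's hot-key rows `num_salts` times.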

5. Simulate the OA Environment

- Use a coding platform’s IDE and disable all distractions.

- Enforce the OA duration (often ~90 minutes for 2–3 problems).

- If SQL is likely, practice locally with SQLite/DuckDB or a free Spark notebook in local mode, but write portable SQL without vendor quirks.
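As one way to set up that local practice, the sketch below uses Python's built-in `sqlite3` module with an in-memory database to run a "Department Top Three Salaries"-style query. The single-table schema and the rows are hypothetical simplifications (the classic problem uses separate employee and department tables), and window functions need SQLite 3.25 or newer, which recent Python builds typically bundle (check `sqlite3.sqlite_version`).

```python
import sqlite3

# Hypothetical single-table schema; the ranking logic is the same with a separate department table.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE employee (id INTEGER PRIMARY KEY, name TEXT, salary INTEGER, department TEXT);
    INSERT INTO employee (name, salary, department) VALUES
      ('Ana', 90000, 'Eng'), ('Bo', 85000, 'Eng'), ('Cy', 85000, 'Eng'),
      ('Di', 70000, 'Eng'), ('Ed', 60000, 'Eng'), ('Flo', 80000, 'Sales');
    """
)

# DENSE_RANK (not ROW_NUMBER) so tied salaries share a rank and count as one of the top three.
query = """
SELECT department, name, salary
FROM (
  SELECT department, name, salary,
         DENSE_RANK() OVER (PARTITION BY department ORDER BY salary DESC) AS rnk
  FROM employee
) AS ranked
WHERE rnk <= 3
ORDER BY department, salary DESC, name
"""
rows = conn.execute(query).fetchall()
for row in rows:
    print(row)
conn.close()
```

The query sticks to standard window-function syntax, so the same text should also run in engines like DuckDB, PostgreSQL, or Spark SQL.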

6. Get Feedback and Iterate

Do short retros after each session:

- What cost me time? (parsing, edge cases, off-by-one)

- Did I pick the optimal data structure early?

- Is my code readable and testable?

Fix recurring issues deliberately in the next 3–5 problems.

Databricks Interview Question Breakdown

Below are sample problems that mirror Databricks-style thinking. Use them to practice both coding and “scale” reasoning.

1. External K-Way Merge for Huge Sorted Chunks

- Type: Heap / File-Processing Pattern

- Prompt: You’re given K sorted arrays representing pre-sorted chunks from disk. Merge them into one sorted array.

- Trick: Use a min-heap seeded with the first element of each list; every pop is followed by a push from the same list, so the merge costs O(n log K) for n total elements. Avoid sequential pairwise merges, which cost O(Kn).

- What It Tests: Heap fluency, understanding of external sort patterns, and memory-aware merging.
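A minimal sketch of the heap-based merge, assuming integer chunks. `k_way_merge` is a hypothetical name; the chunks can be any iterables (for example, line iterators over sorted files), which is what makes the pattern memory-aware.

```python
import heapq
from typing import Iterable, Iterator

_DONE = object()  # sentinel marking an exhausted chunk


def k_way_merge(chunks: list[Iterable[int]]) -> Iterator[int]:
    """Lazily merge K sorted iterables; the heap never holds more than K entries.

    Total cost is O(n log K) for n elements overall, and only one element per
    chunk needs to be in memory at a time.
    """
    iters = [iter(chunk) for chunk in chunks]
    heap: list[tuple[int, int]] = []
    for idx, it in enumerate(iters):
        first = next(it, _DONE)
        if first is not _DONE:
            heap.append((first, idx))  # the chunk index breaks ties between equal values
    heapq.heapify(heap)

    while heap:
        value, idx = heap[0]
        nxt = next(iters[idx], _DONE)
        if nxt is _DONE:
            heapq.heappop(heap)  # this chunk is exhausted
        else:
            heapq.heapreplace(heap, (nxt, idx))  # pop + push in one O(log K) step
        yield value


print(list(k_way_merge([[1, 4, 9], [2, 3, 10], [], [5]])))  # [1, 2, 3, 4, 5, 9, 10]
```

Python's standard library already ships this as `heapq.merge`; in an OA, though, writing the heap loop yourself is usually the point.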

2. Top-K Frequent Keys in a Log Stream

- Type: Heap / Hash Map / Streaming

- Prompt: Given a stream of string events, return the K most frequent keys at the end.

- Trick: Count with a hash map, then keep a size-K min-heap (O(m log K) for m distinct keys). When K is close to m, bucket keys by frequency instead: counts are bounded by the number of events n, so this runs in O(n).

- What It Tests: Time vs space trade-offs, streaming mindset, and heap correctness.
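A sketch of both options, with hypothetical function names. It assumes exact counts fit in memory; if they do not, approximate heavy-hitter structures are the usual fallback. Ordering among keys with equal counts is not specified here and may differ between the two functions.

```python
import heapq
from collections import Counter
from typing import Iterable


def top_k_heap(events: Iterable[str], k: int) -> list[str]:
    """Count, then keep a size-k min-heap of (count, key): O(m log k) for m distinct keys."""
    if k <= 0:
        return []
    counts = Counter(events)
    heap: list[tuple[int, str]] = []
    for key, cnt in counts.items():
        if len(heap) < k:
            heapq.heappush(heap, (cnt, key))
        elif cnt > heap[0][0]:
            heapq.heapreplace(heap, (cnt, key))  # evict the current smallest count
    return [key for cnt, key in sorted(heap, reverse=True)]  # most frequent first


def top_k_buckets(events: Iterable[str], k: int) -> list[str]:
    """Bucket keys by frequency; counts never exceed the number of events n, so this is O(n)."""
    if k <= 0:
        return []
    counts = Counter(events)
    n = sum(counts.values())
    buckets: list[list[str]] = [[] for _ in range(n + 1)]
    for key, cnt in counts.items():
        buckets[cnt].append(key)
    result: list[str] = []
    for cnt in range(n, 0, -1):  # walk from the highest possible count down
        for key in buckets[cnt]:
            result.append(key)
            if len(result) == k:
                return result
    return result


events = ["a"] * 5 + ["b"] * 3 + ["c"] + ["d"] * 4
print(top_k_heap(events, 2), top_k_buckets(events, 2))  # ['a', 'd'] ['a', 'd']
```

In production Python, `Counter(events).most_common(k)` covers the same ground in one line; the explicit versions show the trade-off an interviewer will ask about.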

3. Deduplicate Events Within a Time Window

- Type: Sliding Window / Hash Map

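- Prompt: Each event has an id and a timestamp. Return the events with duplicates removed, where an event is a duplicate if the same id occurred at most T units earlier.

- Trick: Maintain a map id → lastSeenTimestamp; accept an event only if its id is new or now - lastSeen > T. Confirm whether lastSeen should track the last occurrence or the last accepted event, since the two rules give different results. If the input isn’t sorted, sort by timestamp first.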

- What It Tests: Idempotency logic, windowing patterns, and edge cases (equal timestamps, boundaries).
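A minimal sketch, assuming integer timestamps and the "last occurrence" reading of the prompt (every event, kept or dropped, refreshes the window). `Event` and `dedupe_within_window` are hypothetical names.

```python
from typing import NamedTuple


class Event(NamedTuple):
    event_id: str
    ts: int


def dedupe_within_window(events: list[Event], t: int) -> list[Event]:
    """Keep an event unless the same id occurred at most `t` time units earlier.

    Assumption: "previous occurrence" counts every earlier event with that id, including
    dropped ones, so last_seen is updated on every event. To measure from the last
    *kept* event instead, update last_seen only inside the if-branch.
    """
    last_seen: dict[str, int] = {}
    kept: list[Event] = []
    # sorted() is stable, so events with equal timestamps keep their input order.
    for ev in sorted(events, key=lambda e: e.ts):
        prev = last_seen.get(ev.event_id)
        if prev is None or ev.ts - prev > t:
            kept.append(ev)
        last_seen[ev.event_id] = ev.ts
    return kept


events = [Event("a", 0), Event("a", 3), Event("b", 4), Event("a", 6), Event("a", 20)]
print(dedupe_within_window(events, t=5))  # keeps a@0, b@4, a@20
```

Here `a@6` is dropped because `a@3` (itself a duplicate) was only 3 units earlier; under the "last kept" rule it would survive, since 6 - 0 > 5. On an unbounded stream you would also evict ids whose last timestamp is more than T old so the map stays bounded.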

4. Sessionize Clickstream and Compute Session Durations

- Type: Sorting / Two Pointers / Aggregation

- Prompt: For each user’s sorted events, break into sessions where gaps > G start a new session. Compute total duration per session.

- Trick: Walk each user’s events linearly; track session start and last event time. Duration is last - first (or include per-event durations if provided).

- What It Tests: Real-world analytics transformations, careful boundary handling, and scalable linear passes.
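A sketch of the linear pass, assuming events arrive as `(user, timestamp)` pairs with integer timestamps and that duration means last minus first event time. The function name and return shape are hypothetical.

```python
from collections import defaultdict


def sessionize(events: list[tuple[str, int]], gap: int) -> dict[str, list[tuple[int, int, int]]]:
    """Split each user's events into sessions; a gap strictly greater than `gap` starts a new one.

    Returns user -> list of (session_start, session_end, duration), where duration = end - start.
    """
    by_user: dict[str, list[int]] = defaultdict(list)
    for user, ts in events:
        by_user[user].append(ts)

    sessions: dict[str, list[tuple[int, int, int]]] = {}
    for user, stamps in by_user.items():
        stamps.sort()  # no-op cost if each user's events are already sorted
        out = []
        start = last = stamps[0]
        for ts in stamps[1:]:
            if ts - last > gap:  # gap exceeded: close the current session
                out.append((start, last, last - start))
                start = ts
            last = ts
        out.append((start, last, last - start))  # close the final session
        sessions[user] = out
    return sessions


clicks = [("u1", 0), ("u1", 10), ("u1", 100), ("u1", 105), ("u2", 50)]
print(sessionize(clicks, gap=30))
```

With `gap=30`, user `u1` gets two sessions, (0, 10) and (100, 105), and the single-event session for `u2` has duration 0, which is a boundary case worth stating explicitly in an OA.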

5. Connected Components with Heavy Nodes

- Type: Graph / Union-Find

- Prompt: Given an undirected graph, return its connected components. Some nodes are “heavy” (high degree). Ensure your approach remains efficient.

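- Trick: Union-Find with path compression and union by rank gives near-linear performance (O(E · α(V))) and works directly on the edge list. A single BFS/DFS with a shared visited set is also O(V + E); what to avoid is re-scanning a heavy node’s adjacency list, for example by launching a fresh traversal from every node.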

- What It Tests: Graph fundamentals and performance sensitivity to skewed degrees.
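A minimal Union-Find sketch, assuming nodes are labeled 0..n-1 and edges are given as pairs; `UnionFind` and `connected_components` are illustrative names. A heavy node is simply many edges in the list, and each union stays close to O(1) amortized.

```python
from collections import defaultdict


class UnionFind:
    def __init__(self, n: int) -> None:
        self.parent = list(range(n))
        self.rank = [0] * n

    def find(self, x: int) -> int:
        root = x
        while self.parent[root] != root:
            root = self.parent[root]
        # Path compression: point every node on the walked path straight at the root.
        while x != root:
            nxt = self.parent[x]
            self.parent[x] = root
            x = nxt
        return root

    def union(self, a: int, b: int) -> bool:
        ra, rb = self.find(a), self.find(b)
        if ra == rb:
            return False  # already in the same component
        if self.rank[ra] < self.rank[rb]:
            ra, rb = rb, ra
        self.parent[rb] = ra  # union by rank: attach the shallower tree under the deeper one
        if self.rank[ra] == self.rank[rb]:
            self.rank[ra] += 1
        return True


def connected_components(n: int, edges: list[tuple[int, int]]) -> list[list[int]]:
    uf = UnionFind(n)
    for a, b in edges:  # one pass over the edge list, no adjacency lists needed
        uf.union(a, b)
    groups: dict[int, list[int]] = defaultdict(list)
    for node in range(n):
        groups[uf.find(node)].append(node)
    return sorted(groups.values())


# Node 0 is a "heavy" hub; node 6 is isolated.
print(connected_components(7, [(0, 1), (0, 2), (0, 3), (4, 5)]))
```

The `False` return from `union` doubles as cycle detection, which is the same trick behind the Redundant Connection problem listed earlier.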

What Comes After the Online Assessment

Passing the OA gets you into the real evaluation: your ability to build, debug, and design systems that handle large-scale data.

1. Recruiter Debrief & Scheduling

Expect a quick sync if you pass. Ask about upcoming rounds: number of interviews, expected focus (algorithms vs design), and whether to prepare for SQL or data-intensive scenarios.

2. Live Technical Interviews

You’ll code in a shared editor with one or two engineers.

- Algorithmic questions similar to the OA, but interactive.

- Reasoning aloud is key: narrate trade-offs and edge cases.

- Short debugging or refactoring exercises may appear.

Tip: Review your OA code — interviewers sometimes ask you to walk through it and discuss alternatives.

3. System Design / Data Architecture Interview

For mid-level and senior roles, expect a 45–60 minute design session. Common prompts:

- Build a reliable ingestion pipeline from object storage to a lakehouse table (think Delta-like semantics).

- Design a streaming aggregation with exactly-once processing and late data handling.

- Scale a feature store or metadata/search service.

What they evaluate:

- How you break down ingestion, storage, compute, and serving layers

- Partitioning, indexing, and join strategies; dealing with skew

- Consistency and fault tolerance (idempotency, retries, checkpoints)

- Cost-awareness and operability (backfills, schema evolution, monitoring)
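To make the idempotency point concrete, here is a toy sketch of a sink that commits each micro-batch at most once, keyed by a batch id, so a replay after a failure is a no-op. `IdempotentSink` is hypothetical and keeps state in memory; a real design would persist the committed ids atomically with the data (for example, in the same transaction or commit log), which is exactly the part worth discussing in a design round.

```python
class IdempotentSink:
    """Toy sink: each batch id is applied at most once, so retries cannot double-write."""

    def __init__(self) -> None:
        self.committed: set[int] = set()  # in a real system, persisted with the data
        self.rows: list[str] = []

    def write_batch(self, batch_id: int, rows: list[str]) -> bool:
        if batch_id in self.committed:
            return False  # replay of an already-committed batch: skip it
        self.rows.extend(rows)
        self.committed.add(batch_id)
        return True


sink = IdempotentSink()
sink.write_batch(0, ["a", "b"])
sink.write_batch(1, ["c"])
sink.write_batch(1, ["c"])  # replayed after a simulated crash before the checkpoint advanced
print(sink.rows)  # ['a', 'b', 'c']
```

Retries at the source plus deduplication by batch id at the sink is one common way to get effectively-once results from at-least-once delivery.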

4. Behavioral & Collaboration Interviews

Expect questions about ownership, reliability, and teamwork across product and data stakeholders.

- “Tell me about a time you fixed a production issue under time pressure.”

- “Describe a technical decision where you balanced performance, cost, and simplicity.”

Use STAR and quantify impact. Highlight data-informed decisions and cross-functional communication.

5. Final Round / Virtual Onsite

A multi-interview block combining:

- Additional coding (often medium/hard)

- Deep-dive design or architecture

- Cross-functional alignment (PM/data partner) depending on team

Pace yourself for several hours and take brief resets between sessions.

6. Offer & Negotiation

Comp packages typically include base, bonus, and equity. Ask about refresh cycles, leveling, and the team roadmap. Come prepared with market comps, and clarify how location or remote status affects compensation.

Conclusion

You don’t need to guess; you need to train for the right patterns. If you:

- Benchmark honestly and target your weak areas,

- Practice heaps, sliding windows, prefix sums, graphs, and data-flavored problems under time,

- Write clean, testable code and explain scale-aware trade-offs,

you’ll turn the Databricks OA from a hurdle into a strong first impression. Big data experience helps, but disciplined problem solving — and clear communication — is what gets you to the loop.

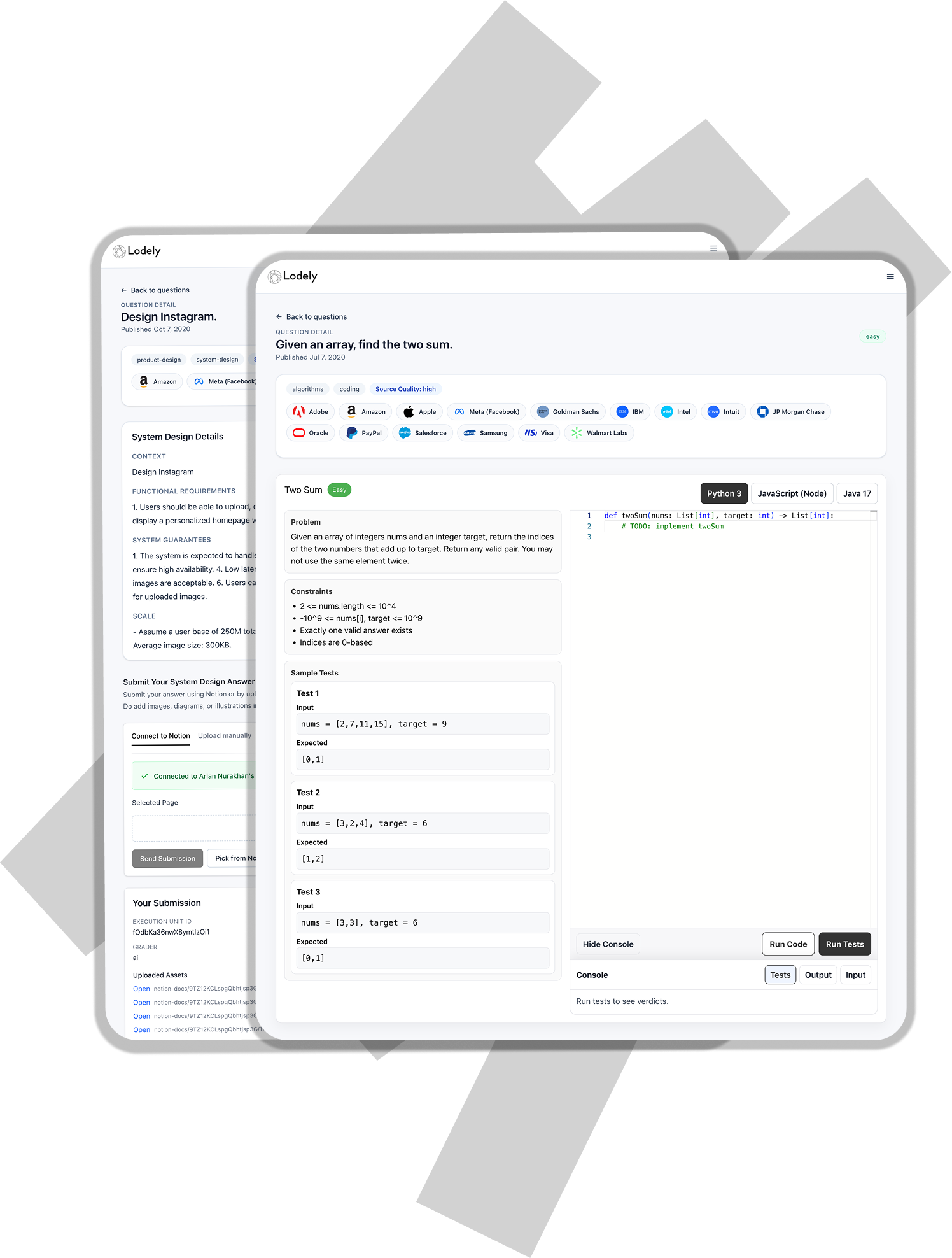

For more practical insights and prep strategies, explore the Lodely Blog or start from Lodely’s homepage to find guides and career resources tailored to software engineers.

✉️ Get free system design pdf resource and interview tips weekly
