OpenAI Online Assessment: Questions Breakdown and Prep Guide

Thinking about “Cracking OpenAI’s Assessment” but not sure what’s inside? You’re not alone. Engineers see CodeSignal/HackerRank links, timed questions, and domain-flavored puzzles — and the hardest part is knowing which patterns to drill.

If you want to walk into the OpenAI Online Assessment (OA) confident, focused, and ready to execute, this guide is your playbook. No fluff. Real breakdowns. Strategic prep.

When to Expect the OA for Your Role

OpenAI doesn’t ship a one-size-fits-all assessment. Timing and content depend on role, level, and team.

- New Grad & Intern (SWE/Research Engineer)
  - Most candidates receive an OA within a week or two after an initial recruiter touchpoint. Often the primary technical screen before onsite loops.

- Software Engineer (Product/Infra/Platform)
  - Standard practice. Expect a CodeSignal or HackerRank link. Focus is DSA with pragmatic systems-flavored twists (throughput, latency, correctness).

- Research Engineer / Applied AI Engineer
  - Expect general coding questions plus occasional math/numerical stability, data parsing, or “ML-adjacent” tasks (vector ops, streaming, top-k).

- Safety/Evals/Trust
  - OA still coding-heavy, with potential parsing, filtering, and rule-engine style challenges; sometimes log/event sequencing.

- Senior/Staff+
  - Some pipelines skip a generic OA in favor of take-homes or live coding/system design. Many reports still show an OA as a first filter.

Action step: As soon as you hear from a recruiter, ask: “What’s the expected format for this role’s OA? Platform, language constraints, duration, and number of questions?” You’ll usually get at least the platform and timing.

Does Your Online Assessment Matter?

Short answer: yes — more than you might think.

- It’s the main filter. A strong CV opens the door, but your OA performance decides the next round.

- It sets the tone. Your OA code may be referenced by interviewers later to guide conversation.

- It mirrors the work. OpenAI emphasizes correctness, performance, clarity, and edge cases under real constraints.

- It signals engineering habits. Clean structure, naming, tests, and reasoning about complexity all matter.

- It rewards numerical care. Handling precision, overflows, and stable computations is a plus for ML-adjacent roles.

Pro Tip: Treat the OA like your first interview. Write readable, tested code; communicate assumptions in comments; handle edge cases.

Compiled List of OpenAI OA Question Types

Candidates commonly report the following categories. Practice them:

- Longest Substring Without Repeating Characters — type: Sliding Window / Hashing

- Top K Frequent Elements — type: Heap / Counting

- Find Median from Data Stream — type: Two Heaps / Streaming

- Logger Rate Limiter — type: Caching / Time-based Window

- Design Hit Counter — type: Queue / Rolling Window

- Consistent Hashing (primer) — type: Distributed Systems / Hash Ring

- Merge k Sorted Lists — type: Heap / Divide & Conquer

- Network Delay Time — type: Graph / Dijkstra

- LRU Cache — type: Design / Doubly Linked List + Hash Map

- Merge Intervals — type: Sorting / Interval Merging

- Edit Distance — type: Dynamic Programming

- Dot Product of Two Sparse Vectors — type: Sparse Data / Hash Maps

- Linked List Random Node — type: Reservoir Sampling / Probability

- Softmax and Log-Sum-Exp (notes) — type: Numerical Stability / Math
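To make one entry concrete: Longest Substring Without Repeating Characters is the classic sliding-window warm-up. Below is a minimal Python sketch (Python because the guide later suggests it for OA speed). The function name `longest_unique_substring` is our own, not from any official prompt, and it returns only the length.

```python
def longest_unique_substring(s: str) -> int:
    """Length of the longest substring of s with no repeated characters."""
    last_seen: dict[str, int] = {}  # char -> most recent index where it appeared
    best = 0
    left = 0  # left edge of the current window
    for right, ch in enumerate(s):
        # If ch already occurs inside the window, jump left past that occurrence.
        if ch in last_seen and last_seen[ch] >= left:
            left = last_seen[ch] + 1
        last_seen[ch] = right
        best = max(best, right - left + 1)
    return best


print(longest_unique_substring("abcabcbb"))  # 3 ("abc")
```

Both window edges only move right, so the scan is O(n) time and O(alphabet size) extra space.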

How to Prepare and Pass the OpenAI Online Assessment

Treat prep like a structured training cycle. You’re building reflexes, not just memorizing answers.

1. Assess Your Starting Point (Week 1)

- Benchmark your speed/accuracy on arrays, hashing, strings, graphs, and heaps.

- Note gaps: DP comfort? streaming problems? math/numerics? parsing?

- Use LeetCode Explore cards or HackerRank tracks to calibrate difficulty and pacing.

2. Pick a Structured Learning Path (Weeks 2-6)

You have options:

- Self-study on LeetCode/HackerRank
  - Best for disciplined learners. Build topic lists, enforce timers, track misses.

- Mock assessments / timed platforms
  - Simulate CodeSignal/HackerRank conditions with proctoring and fixed clocks.

- Mentor or coach feedback
  - A software engineer career coach can review code quality, test strategy, and pacing.

Language note: Many OpenAI candidates use Python for fast iteration. Be comfortable with heapq, collections, bisect, and writing quick unit tests. For infra-oriented roles, brush up on Go/C++ ergonomics and complexity.
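A rough sketch of how those modules show up in practice, using Top K Frequent Elements as the example. The helper names and the tie-breaking rule (smaller value wins on equal counts) are assumptions for illustration; real prompts usually define their own tie rule.

```python
import bisect
import heapq
from collections import Counter


def top_k_frequent(nums: list[int], k: int) -> list[int]:
    """Return the k most frequent values; ties go to the smaller value (assumed rule)."""
    counts = Counter(nums)
    # nlargest selects with a bounded heap, roughly O(n log k) after counting.
    return heapq.nlargest(k, counts, key=lambda v: (counts[v], -v))


def count_at_most(sorted_vals: list[int], x: int) -> int:
    """Number of elements <= x in an already-sorted list, via binary search."""
    return bisect.bisect_right(sorted_vals, x)


# Quick inline tests, the kind you can paste into an OA runner.
assert top_k_frequent([1, 1, 1, 2, 2, 3], 2) == [1, 2]
assert top_k_frequent([4, 4, 5, 5, 6], 2) == [4, 5]
assert count_at_most([1, 3, 3, 7], 3) == 3
print("all tests passed")
```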

3. Practice With Realistic Problems (Weeks 3-8)

- Build a 40–60 problem set focused on:
  - Sliding window, heaps, graphs (Dijkstra/BFS), intervals, streaming statistics
  - Caching (LRU/LFU), rate limiting patterns, k-way merge
  - Sparse vector ops, top-k, and numerically stable computations

- Practice under a timer and refactor after solving for readability and edge cases.
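For the graph item on that list, here is a hedged sketch of Dijkstra in the shape of Network Delay Time: directed edges `(u, v, w)` with non-negative weights, nodes numbered `1..n`, and a source `k`. Those input conventions are assumed from the common public version of the problem, not from any OpenAI prompt.

```python
import heapq
from collections import defaultdict


def network_delay_time(times: list[tuple[int, int, int]], n: int, k: int) -> int:
    """Dijkstra from source k over nodes 1..n. Returns the time for every node
    to receive the signal, or -1 if some node is unreachable."""
    graph: dict[int, list[tuple[int, int]]] = defaultdict(list)
    for u, v, w in times:
        graph[u].append((v, w))

    dist: dict[int, int] = {}  # finalized shortest distances
    heap = [(0, k)]  # (distance so far, node)
    while heap:
        d, node = heapq.heappop(heap)
        if node in dist:  # already finalized with a shorter or equal distance
            continue
        dist[node] = d
        for nxt, w in graph[node]:
            if nxt not in dist:
                heapq.heappush(heap, (d + w, nxt))

    return max(dist.values()) if len(dist) == n else -1
```

Skipping stale heap entries ("lazy deletion") avoids a decrease-key operation, which `heapq` does not provide.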

4. Learn OpenAI-Specific Patterns

Expect domain-flavored twists like:

- Token accounting and context windows
  - Interval merging, sliding windows, and capacity planning

- Streaming and backpressure
  - Online algorithms, two heaps, bounded memory, chunked I/O

- Throughput/latency trade-offs
  - Batching, amortized costs, cache hit rates, and queueing

- Numerical stability and precision
  - Log-sum-exp, overflow avoidance, integer boundaries

- Safety/evals-style parsing
  - Rule filters, trie/prefix checks, normalization, and deduplication

- Distributed thinking
  - Consistent hashing, partitioning, and fault-aware reasoning
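The log-sum-exp trick from the numerical-stability bullet fits in a few lines. This sketch assumes plain Python floats (no NumPy) and non-empty input; the idea is to subtract the maximum before exponentiating so that no term can overflow.

```python
import math


def log_sum_exp(xs: list[float]) -> float:
    """Compute log(sum(exp(x))) without overflow by factoring out the max."""
    if not xs:
        raise ValueError("log_sum_exp of an empty list is undefined")
    m = max(xs)
    # Every exp(x - m) is <= 1, so the sum stays small and finite.
    return m + math.log(sum(math.exp(x - m) for x in xs))


def softmax(xs: list[float]) -> list[float]:
    """Numerically stable softmax: exp(x_i - logsumexp(xs))."""
    lse = log_sum_exp(xs)
    return [math.exp(x - lse) for x in xs]


# math.exp(1000.0) on its own raises OverflowError; the shifted version is fine.
print(softmax([1000.0, 1000.0]))
```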

5. Simulate the OA Environment

- Use a CodeSignal/HackerRank practice environment.

- Pick your language and stick to it; set a strict timer (commonly 90–120 minutes for 2–4 tasks).

- Disable all distractions; practice reading dense prompts quickly and extracting constraints.

- Get used to writing your own tests in the provided runner.

6. Get Feedback and Iterate

- Track misses by category (off-by-one, overflow, timeouts, memory).

- Re-solve problem variants after a week to cement patterns.

- Share code with peers/mentors; aim for clarity, not just correctness.

OpenAI Interview Question Breakdown

Here are featured sample problems inspired by the patterns above. Practicing them covers much of the ground in a typical OpenAI OA.

1. Token Window Utilization in Streaming Output

- Type: Intervals / Greedy

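- Prompt: Given a context window of fixed capacity, a list of token spans already reserved in it, and incoming spans to insert, merge all spans and compute how many tokens remain free, i.e. the maximum number of additional tokens that can still fit without overlap.

- Trick: Sort by start, merge overlapping intervals, then subtract the total covered length from the capacity. Fix a boundary convention (half-open [start, end) vs. inclusive) up front to avoid off-by-one errors.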

- What It Tests: Interval merging accuracy, careful index math, and translating real constraints (context limits) into code.
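A minimal sketch of that approach, assuming half-open spans `[start, end)` that already lie inside `[0, capacity)`. The function name `free_tokens` and the input shape are hypothetical, since the actual prompt wording isn't given here.

```python
def free_tokens(capacity: int, reserved: list[tuple[int, int]],
                incoming: list[tuple[int, int]]) -> int:
    """Tokens still free after placing all spans in a window of `capacity`.

    Assumes half-open spans [start, end) with 0 <= start <= end <= capacity.
    """
    spans = sorted(reserved + incoming)  # sort by start (then by end)
    covered = 0
    cur_start, cur_end = 0, 0  # current merged block, initially empty [0, 0)
    for start, end in spans:
        if start > cur_end:
            # Gap before this span: close out the current block.
            covered += cur_end - cur_start
            cur_start, cur_end = start, end
        else:
            # Overlapping or touching: extend the current block.
            cur_end = max(cur_end, end)
    covered += cur_end - cur_start
    return capacity - covered


print(free_tokens(100, [(0, 10), (20, 30)], [(5, 25)]))  # 70
```

Merging touching spans (`start == cur_end`) doesn't change the covered length under the half-open convention; it just keeps the loop simple.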

2. Median and p95 Latency from a Data Stream

- Type: Two Heaps / Streaming

- Prompt: Maintain a data structure that supports adding latencies and querying median and approximate p95 in near real time.

- Trick: Use two heaps for median; for p95, consider a biased quantile sketch or bucket approximation under memory limits.

- What It Tests: Streaming stats, heap operations, memory/time trade-offs, and approximation reasoning.
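One way to sketch this: an exact median with the two-heap trick, and p95 approximated with fixed-width histogram buckets (one of the bucket approximations mentioned above; a dedicated quantile sketch would be more accurate). The class name, the 10 ms bucket width, and the bucket count are arbitrary assumptions, and latencies are assumed to be non-negative.

```python
import heapq


class LatencyStats:
    """Exact running median via two heaps; approximate p95 via a fixed-width
    histogram. Bucket width and count are illustrative defaults."""

    def __init__(self, bucket_ms: int = 10, num_buckets: int = 1000) -> None:
        self.low: list[float] = []   # max-heap of the smaller half (values negated)
        self.high: list[float] = []  # min-heap of the larger half
        self.bucket_ms = bucket_ms
        self.buckets = [0] * num_buckets  # the last bucket also absorbs overflow
        self.count = 0

    def add(self, latency_ms: float) -> None:
        # Route through `low` so every element of low <= every element of high.
        heapq.heappush(self.low, -latency_ms)
        heapq.heappush(self.high, -heapq.heappop(self.low))
        # Keep len(low) == len(high) or len(low) == len(high) + 1.
        if len(self.high) > len(self.low):
            heapq.heappush(self.low, -heapq.heappop(self.high))
        idx = min(int(latency_ms // self.bucket_ms), len(self.buckets) - 1)
        self.buckets[idx] += 1
        self.count += 1

    def median(self) -> float:
        if not self.low:
            raise ValueError("no samples yet")
        if len(self.low) > len(self.high):
            return -self.low[0]
        return (-self.low[0] + self.high[0]) / 2

    def p95_upper_bound(self) -> float:
        """Upper edge of the bucket holding the nearest-rank p95 sample
        (not a true bound if that sample sits in the overflow bucket)."""
        if self.count == 0:
            raise ValueError("no samples yet")
        target = (95 * self.count + 99) // 100  # ceil(0.95 * count), integers only
        seen = 0
        for i, c in enumerate(self.buckets):
            seen += c
            if seen >= target:
                return (i + 1) * self.bucket_ms
        return len(self.buckets) * self.bucket_ms  # unreachable when count > 0
```

The heaps use O(n) memory for an exact median, while the histogram is fixed-size: each p95 query costs O(buckets) and is accurate only to one bucket width.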

3. Token Bucket Rate Limiter

- Type: Rate Limiting / Time-based Refill

- Prompt: Implement a rate limiter that allows bursts up to capacity C and refills R tokens per second. Given timestamps and requests, decide which are allowed.

- Trick: Track last refill time and compute lazy refills. Avoid floating point drift; consider integer math.

- What It Tests: Time-window logic, numerical care, and production-minded correctness.
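A sketch of lazy refill with integer-only math. Assumptions not taken from any actual prompt: timestamps are integer milliseconds arriving in non-decreasing order, each request costs one token, the bucket starts full, and `rate` is a whole number of tokens per second. Storing tokens in thousandths means one millisecond of refill is exactly `rate` milli-tokens, so there is no floating-point drift.

```python
from typing import Optional


class TokenBucket:
    """Bursts up to `capacity` requests; refills `rate` tokens per second."""

    def __init__(self, capacity: int, rate: int) -> None:
        self.cap_milli = capacity * 1000     # capacity in milli-tokens
        self.rate = rate                     # tokens/s == milli-tokens/ms
        self.tokens_milli = self.cap_milli   # start full
        self.last_ms: Optional[int] = None

    def allow(self, now_ms: int) -> bool:
        # Lazy refill: credit only the time elapsed since the previous call.
        if self.last_ms is not None:
            elapsed = max(0, now_ms - self.last_ms)  # ignore clock going backwards
            self.tokens_milli = min(self.cap_milli,
                                    self.tokens_milli + elapsed * self.rate)
        self.last_ms = now_ms
        if self.tokens_milli >= 1000:  # one request costs one whole token
            self.tokens_milli -= 1000
            return True
        return False


bucket = TokenBucket(capacity=2, rate=1)
print([bucket.allow(t) for t in (0, 0, 0, 500, 1000)])
# [True, True, False, False, True]
```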

4. Top-K Cosine Similar Items over Sparse Vectors

- Type: Heap / Sparse Math

- Prompt: Given a query sparse vector and a set of sparse vectors, return the top-k by cosine similarity.

- Trick: Precompute norms; compute dot products using intersecting indices only; maintain a min-heap of size k.

- What It Tests: Sparse data structures, numerical stability, and heap-based selection.
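A minimal sketch, assuming each sparse vector is a Python `dict` mapping index to nonzero value; the type alias and function names are hypothetical. Here item norms are computed once per call; in a real index they would be computed once at build time and reused across queries.

```python
import heapq
import math

SparseVec = dict[int, float]  # index -> nonzero value


def sparse_dot(a: SparseVec, b: SparseVec) -> float:
    # Iterate over the smaller vector; only shared indices contribute.
    if len(a) > len(b):
        a, b = b, a
    return sum(v * b[i] for i, v in a.items() if i in b)


def norm(v: SparseVec) -> float:
    return math.sqrt(sum(x * x for x in v.values()))


def top_k_cosine(query: SparseVec, items: list[SparseVec],
                 k: int) -> list[tuple[float, int]]:
    """Return up to k (similarity, item_index) pairs, highest similarity first."""
    q_norm = norm(query)
    if q_norm == 0.0 or k <= 0:
        return []
    item_norms = [norm(v) for v in items]  # precompute once per item
    heap: list[tuple[float, int]] = []  # min-heap holding the current top k
    for idx, (vec, n) in enumerate(zip(items, item_norms)):
        if n == 0.0:
            continue  # cosine is undefined for an all-zero vector; skip it
        sim = sparse_dot(query, vec) / (q_norm * n)
        if len(heap) < k:
            heapq.heappush(heap, (sim, idx))
        elif sim > heap[0][0]:
            heapq.heapreplace(heap, (sim, idx))  # evict the current minimum
    return sorted(heap, reverse=True)
```

The min-heap's root is always the weakest of the current top k, so each new candidate needs a single comparison and at most one O(log k) replacement.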

5. Consistent Hashing for Sharded Key-Value Store

- Type: Distributed Systems / Hash Ring

- Prompt: Design a mapping from keys to nodes that minimizes remapping when nodes join/leave. Support get_node(key), add_node, remove_node.

- Trick: Use a hash ring with virtual nodes; binary search for successor on the ring. Handle wrap-around.

- What It Tests: System design fundamentals, hashing, and edge-case handling under topology changes.
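A compact sketch of a hash ring with virtual nodes. Assumptions: nodes and keys are strings, SHA-256 truncated to 64 bits is the ring hash, and 100 virtual nodes per physical node is an arbitrary default. Keeping the ring as a sorted list with `bisect.insort` makes each vnode insert O(ring size), which is fine at OA scale but not for a very large ring.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    # Stable across processes, unlike Python's randomized built-in hash() for str.
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")


class HashRing:
    """Consistent hash ring with `vnodes` virtual nodes per physical node."""

    def __init__(self, vnodes: int = 100) -> None:
        self.vnodes = vnodes
        self.ring: list[int] = []        # sorted vnode hashes
        self.owner: dict[int, str] = {}  # vnode hash -> physical node

    def add_node(self, node: str) -> None:
        for i in range(self.vnodes):
            h = _hash(f"{node}#{i}")
            if h in self.owner:
                continue  # hash collision (very unlikely): keep the first owner
            bisect.insort(self.ring, h)
            self.owner[h] = node

    def remove_node(self, node: str) -> None:
        for i in range(self.vnodes):
            h = _hash(f"{node}#{i}")
            if self.owner.get(h) == node:
                del self.owner[h]
                self.ring.pop(bisect.bisect_left(self.ring, h))

    def get_node(self, key: str) -> str:
        if not self.ring:
            raise LookupError("ring is empty")
        # First vnode clockwise from the key's hash; wrap past the end to index 0.
        i = bisect.bisect_right(self.ring, _hash(key)) % len(self.ring)
        return self.owner[self.ring[i]]
```

Removing a node only remaps keys whose successor vnode belonged to that node; every other key keeps its owner, which is the minimal-remapping property the prompt asks for.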

What Comes After the Online Assessment

Passing the OpenAI OA earns your seat at the table. The next stages shift from “can you code under time?” to “can you reason, design, and collaborate on hard problems?”

1. Recruiter Debrief & Scheduling

You’ll typically hear back within a few days if you pass. Expect high-level feedback and a walkthrough of the upcoming rounds. Ask about interview composition (coding, systems, ML-adjacent), language preferences, and timelines.

2. Live Technical Interviews

Pair with engineers over video and a collaborative IDE. Expect:

- Algorithmic coding similar to the OA, but interactive

- Debug/extend an existing code snippet

- Clear narration of trade-offs and complexity

Pro tip: Review your OA solutions; interviewers sometimes ask you to walk through your approach.

3. Systems / ML Systems Design

For mid-level and senior roles, you may see a 45–60 minute design session. You might sketch:

- A low-latency inference service with batching and streaming

- An event pipeline for evaluations or safety filtering

- A caching/rate-limiting layer to protect downstream services

They’re looking for clarity on scale, failures, consistency, and pragmatic trade-offs.

4. Research/Deep-Dive Discussion (Role-Dependent)

Common for Research Engineer/Applied roles. Expect:

- Problem decomposition: setting up experiments, metrics, and iteration loops

- Numerical rigor: stability, precision, and data quality

- Translating research ideas into robust, testable code

Bring one or two concise project stories with concrete outcomes and learnings.

5. Behavioral & Collaboration Interview

Expect structured questions about ambiguity, iteration speed, and collaboration. Use STAR (Situation, Task, Action, Result). Highlight:

- Ownership and clarity under uncertainty

- Safety and reliability mindset

- Communication with cross-functional partners

6. Final Loop & Hiring Committee

The final loop may combine multiple interviews (coding, design, cross-functional). Focus on consistency across rounds: clear structure, crisp trade-offs, and calm execution.

7. Offer & Negotiation

If successful, you’ll receive verbal feedback followed by a written offer. Do market research, understand comp components (base, bonus, equity), and be ready to discuss priorities.

Conclusion

You don’t need to guess — you need a plan. The OpenAI OA is demanding but predictable. If you:

- Identify your weak areas early,

- Drill OpenAI-style patterns under time,

- Write clear, tested, numerically careful code,

you’ll turn the OA from a gatekeeper into a springboard. Focus on fundamentals, simulate the real environment, and carry that same clarity into the rounds that follow.

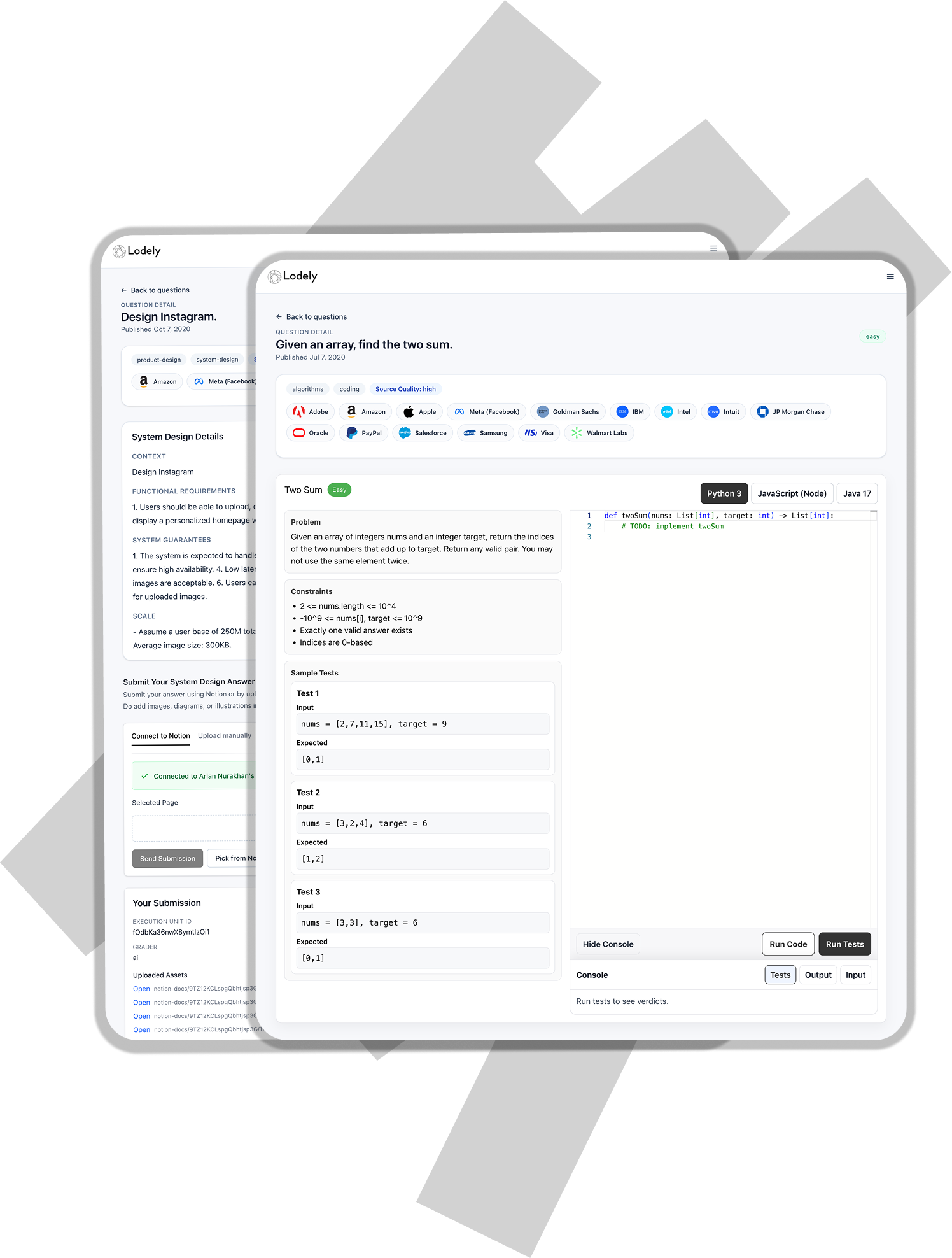

For more practical insights and prep strategies, explore the Lodely Blog or start from Lodely’s homepage to find guides and career resources tailored to software engineers.

✉️ Get free system design pdf resource and interview tips weekly